- Home

- /

- SAS Communities Library

- /

- SAS data format for Hadoop is open to open source

- RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

SAS data format for Hadoop is open to open source

- Article History

- RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

In a recent article we learned how to create an SAS Scalable Performanc

In this example, we will create two uncompressed SPD Engine tables stored on HDFS. We’ll then register those tables to the Hive metastore and join them together to create a Hive target table.

Code to create the two SPD Engine tables:

59 +LIBNAME SPDEHDFS SPDE '/user/sasss1/spde' HDFSHOST=default ACCELWHERE=YES

PARALLELWRITE=YES PARALLELREAD=YES;

NOTE: Libref SPDEHDFS was successfully assigned as follows:

Engine: SPDE

Physical Name: /user/sasss1/spde/

66 +DATA SPDEHDFS.PLAYERS;

67 + KEEP name team;

68 + SET sashelp.baseball;

69 +RUN;

NOTE: There were 322 observations read from the data set SASHELP.BASEBALL.

NOTE: The data set SPDEHDFS.PLAYERS has 322 observations and 2 variables.

NOTE: DATA statement used (Total process time):

real time 1.75 seconds

cpu time 0.16 seconds

70 +

71 +DATA SPDEHDFS.STATS;

72 + KEEP name nhits nruns;

73 + SET sashelp.baseball;

74 +RUN;

NOTE: There were 322 observations read from the data set SASHELP.BASEBALL.

NOTE: The data set SPDEHDFS.STATS has 322 observations and 3 variables.

NOTE: DATA statement used (Total process time):

real time 1.38 seconds

cpu time 0.15 seconds

For SAS Administrators:

Hive SerDe for SPD Engine Data

In the third maintenance release for SAS 9.4, SAS provides a custom Hive SerDe for SPD Engine Data that is stored in HDFS. The SerDe makes the data available for applications outside of SAS to query.

The following are required to access SPD Engine tables using the SPD Engine SerDe:

- You must deploy SAS Foundation using the SAS Deployment Wizard. Select SAS Hive SerDe for SPDE Data.

You must be running a supported Hadoop distribution that includes Hive 0.13:

- Cloudera CDH 5.2 or later

- Hortonworks HDP 2.1 or later

- MapR 4.0.2 or later

The following table features are not supported:

- Compressed or encrypted tables

- Tables with SAS informats

- Tables that have user-defined formats

- Password-protected tables

- Tables owned by the SAS Scalable Performance Data Server

In addition, the following processing functionality is not supported by the SerDe and requires processing by the SPD Engine:

- Write, Update, and Append operations

- If preserving observation order is required.

On the Hadoop head node

To register the SPD Engine tables to the Hive metastore we need to append to the HADOOP_CLASSPATH the SAS_HADOOP_CONFIG_PATH, as well as the directory paths for the Hive JARs and XMLs files:

export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$SAS_HADOOP_CONFIG_PATH:opt/cloudera/parcels/CDH/lib/hive/lib/*:/opt/cloudera/paels/CDH/lib/hadoop-mapreduce/lib/*

In the following two examples, we are registering the SPD Engine tables “players and stats” to the Hive “sasss1” database. The following commands executes the SerDe JAR files and registers the SPD Engine tables to the Hive metastore:

hadoop jar /opt/cloudera/parcels/CDH/lib/hive/lib/sas.HiveSerdeSPDE.jar com.sas.hadoop.serde.sp.hive.MetastoreRegistration -table players -mdflocation /user/sasss1/spde -database sasss1

hadoop jar /opt/cloudera/parcels/CDH/lib/hive/lib/sas.HiveSerdeSPDE.jar com.sas.hadoop.serde.sp.hive.MetastoreRegistration -table stats -mdflocation /user/sasss1/spde -database sasss1

When registering the tables to the Hive metastore the following options are also supported:

-database database_name to specifies a Hive metastore database if you are not using the default database.

-mdflocation is the path on HDFS that we used on our SPD Engine LIBNAME.

-renametable table-name to assigns a different name to the table stored in HDFS.

-owner owner-name that assigns an owner name. The default owner name is Anonymous.

For SAS Programmers:

In this example we will create a new Hive table by joining two SPD Engine tables in the MapReduce framework by using the two registered table in the Hive metastore:

58 +/* HIVE LIBREF */

59 +LIBNAME HIVE HADOOP PORT=10000 TRANSCODE_FAILl=warning

HOST="Hadoop.Head.Node" DATABASE=sasss1 USER=sasss1;

NOTE: Libref HIVE was successfully assigned as follows:

Engine: HADOOP

Physical Name: jdbc:hive2://Hadoop,head.node.com:10000/sasss1

102 +PROC SQL;

103 + CREATE TABLE HIVE.PLAYER_STATS AS

104 + SELECT t1.Name,

105 + t1.Team,

106 + t2.nHits,

107 + t2.nRuns

108 + FROM HIVE.PLAYERS t1

109 + INNER JOIN HIVE.STATS t2 ON (t1.Name = t2.Name);

NOTE: SAS variable labels, formats, and lengths are not written to DBMS tables.

NOTE: Table HIVE.PLAYER_STATS created, with 322 rows and 4 columns.

HADOOP_23: Executed: on connection 4

DROP TABLE sasdata_17_43_43_325_00001

Summary Statistics for HADOOP are:

Total SQL execution seconds were: 0.137603

Total SQL prepare seconds were: 16.867544

Total SQL describe seconds were: 0.002308

Total seconds used by the HADOOP ACCESS engine were 19.764125

110 +QUIT;

NOTE: PROCEDURE SQL used (Total process time):

real time 20.61 seconds

cpu time 0.17 seconds

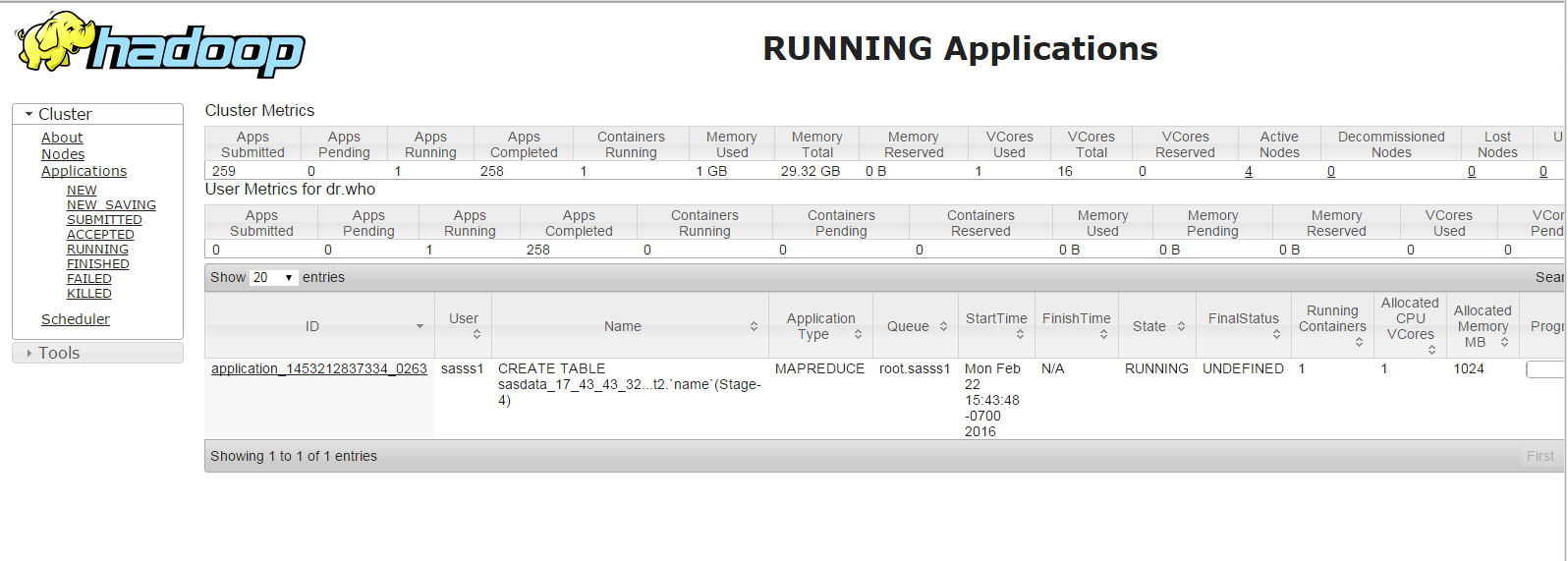

As we can see from Figure 1, the above join is using MapReduce to accomplish the join:

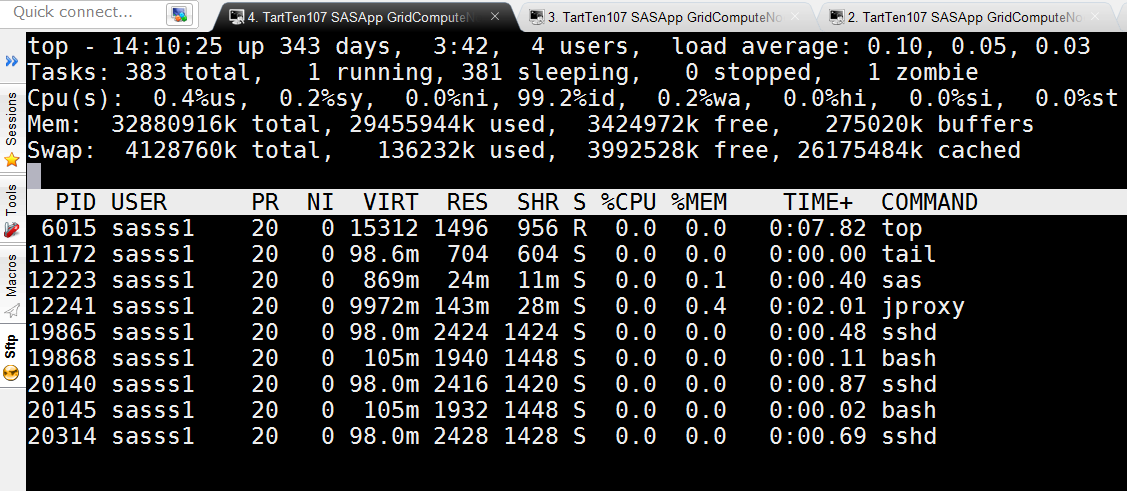

Notice in Figure 2 the SAS Server that submitted this code is not consuming resource:

Figure 2. Top command, notice SAS and JPROXY are not consuming resources

Non-SAS clients

Once the SPD Engine tables are register to the Hive meatastore any non-SAS tool used to process Hive data can also process (read only) the SPD Engine data using HiveQL.

Don't miss out on SAS Innovate - Register now for the FREE Livestream!

Can't make it to Vegas? No problem! Watch our general sessions LIVE or on-demand starting April 17th. Hear from SAS execs, best-selling author Adam Grant, Hot Ones host Sean Evans, top tech journalist Kara Swisher, AI expert Cassie Kozyrkov, and the mind-blowing dance crew iLuminate! Plus, get access to over 20 breakout sessions.

Free course: Data Literacy Essentials

Data Literacy is for all, even absolute beginners. Jump on board with this free e-learning and boost your career prospects.

Get Started

- Find more articles tagged with:

- SAS Data Management for Hadoop