SAS Viya 3.2 CAS Kerberos Out-bound with Remote HDFS

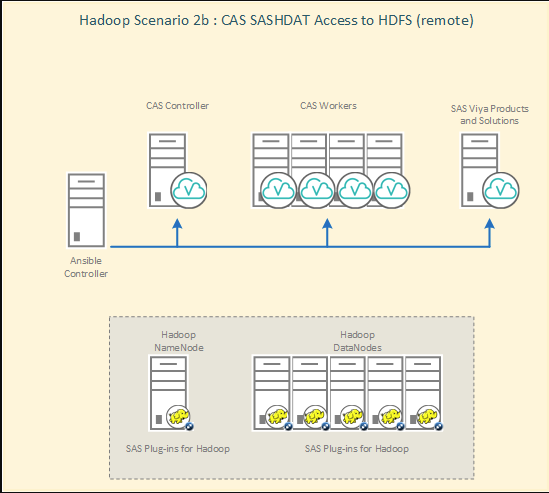

As a follow-on from my previous post, where we looked at CAS with Kerberos Out-bound to Hadoop, in this post I want to explore what is required for SAS Cloud Analytic Services to use Kerberos to connect to a remote HDFS instance.

Specifically, I want to focus on the scenario where SAS Cloud Analytic Services is separate from the Hadoop cluster, but where SASHDAT files are stored in the remote instance of HDFS.

[Image: diagram of this scenario]

The key requirement for access to the remote Hadoop instance is the use of Secure Shell (SSH) between the SAS Cloud Analytic Services nodes and the Hadoop nodes.

Most of the setup for the SAS Cloud Analytic Services side of the picture is essentially the same as before; that is, the information we covered in the previous post. However, there are some minor differences, and we will examine those in this post.

Prerequisites

As with any Kerberos setup the prerequisites are key to obtaining the correct behavior.

Kerberos Principal for CAS

A Kerberos principal is required for SAS Cloud Analytic Services. With SAS Viya 3.2 this principal must be in the format of a Service Principal Name (SPN), <Service Class>/<Fully Qualified Hostname>@<Realm>, where the default Service Class is "sascas". A different Service Class can be used if required.
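As a minimal sketch of the naming format described above, the following Python helper assembles a principal name from its three parts. The function name, the hostname cas01.company.com and the realm COMPANY.COM are hypothetical and used only for illustration; only the "sascas" default comes from this post.

```python
def cas_principal(hostname: str, realm: str, service_class: str = "sascas") -> str:
    """Build <Service Class>/<Fully Qualified Hostname>@<Realm>."""
    # A short hostname is almost certainly a mistake, since the SPN
    # must use the fully qualified hostname.
    if "." not in hostname:
        raise ValueError("hostname should be fully qualified: %r" % hostname)
    return f"{service_class}/{hostname}@{realm}"


# Hypothetical host and realm, for illustration only.
print(cas_principal("cas01.company.com", "COMPANY.COM"))
```

Passing a different value for service_class covers the case where the default Service Class has been changed.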

With an Active Directory Kerberos Key Distribution Center (KDC), the User Principal Name (UPN) for the user must be the same as the SPN. This is because Active Directory only allows a Kerberos Ticket-Granting Ticket to be initialized for a UPN. The same restriction does not apply to other Kerberos distributions, e.g. MIT Kerberos, since these do not distinguish between a UPN and an SPN and merely have principal names.

Kerberos Keytab for CAS

A Kerberos Keytab is required for the principal to be used by SAS Cloud Analytic Services. It contains the long-term keys for the principal. This file must be available on the CAS Controller to the operating system account running the CAS Controller process; by default this is the cas account. The default location and file name is /etc/sascas.keytab; however, this can be changed if necessary.

SSH Configuration

SAS Cloud Analytic Services with remote Hadoop is dependent on SSH for the connection between the SAS Cloud Analytic Services nodes and the Hadoop nodes. When the Hadoop cluster has been secured with Kerberos, this SSH connection must be made using the GSSAPI and hence Kerberos. There are several prerequisites to configuring GSSAPI authentication for SSH.

Enabling GSSAPI

GSSAPI, and hence Kerberos, must be enabled on both the SSH client and the SSH server. For the SSH server, update /etc/ssh/sshd_config and ensure the property GSSAPIAuthentication is set to yes; this is the default value. For the SSH client, update /etc/ssh/ssh_config and ensure the property GSSAPIAuthentication is set to yes; the default is no. In our case the SSH client will be the SAS Cloud Analytic Services nodes and the SSH server will be the Hadoop nodes.

Additional options are available for the SSH client, but these are not required for remote access to Hadoop. One example is GSSAPIDelegateCredentials, which is not required.

The SSH client options should be specified in a Host definition. This ensures the settings are only used when connecting to a specific set of hosts. The Host definition restricts the declarations that follow it (up to the next Host keyword) to those hosts that match one of the patterns given after the keyword. If more than one pattern is provided, they should be separated by whitespace. A single '*' as a pattern can be used to provide global defaults for all hosts. The host is the hostname argument given on the command line (i.e. the name is not converted to a canonicalized host name before matching). For example, to enable GSSAPI for all hosts whose hostname ends with ".myhadoop.cluster.company.com", we would use the following in the /etc/ssh/ssh_config file:

Host *.myhadoop.cluster.company.com
    GSSAPIAuthentication yes
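To get a feel for which hostnames such a Host definition covers, the matching can be approximated in Python. This is only a sketch under stated assumptions: it uses the standard library fnmatch module as a stand-in for the SSH client's own pattern matching, handles only the '*' and '?' style wildcards, and ignores negated patterns. The function name and the hostnames are hypothetical.

```python
from fnmatch import fnmatchcase


def host_block_applies(patterns: str, hostname: str) -> bool:
    """Approximate whether a 'Host <patterns>' block applies to hostname.

    patterns is the whitespace-separated list that follows the Host keyword.
    hostname is the name exactly as typed on the ssh command line.
    """
    host = hostname.lower()
    return any(fnmatchcase(host, pattern.lower()) for pattern in patterns.split())


pattern = "*.myhadoop.cluster.company.com"
# The fully qualified name matches the pattern ...
print(host_block_applies(pattern, "node1.myhadoop.cluster.company.com"))
# ... but the short name does not, because the name is not canonicalized first.
print(host_block_applies(pattern, "node1"))
```

The second call illustrates why the connection must be made with the fully qualified hostname if the GSSAPI settings are scoped in this way.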

Host Principals

To use the GSSAPI for SSH the end-user must be able to obtain a service ticket to connect to the remote machine. The SSH client will request a service ticket for host/<Fully Qualified Hostname>. Therefore, the host Service Principal Name must be registered in the KDC.

The SSH Daemon will check the operating system default keytab for a long-term key associated with the Host principal. The default keytab on Linux is /etc/krb5.keytab. Therefore, this Kerberos Keytab must contain the long-term keys for the Host principal on the Hadoop nodes.

By default, the SSH Daemon will only validate Service Tickets where the SPN matches the host's current hostname. As such, on multi-homed systems or systems using a DNS alias the SSH connection will fail. The SSH Daemon can be configured to validate the Service Ticket using any value within the default Kerberos Keytab. To enable the SSH Daemon to use any value in the Kerberos Keytab, the property GSSAPIStrictAcceptorCheck must be set to no in /etc/ssh/sshd_config.
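The two SSH Daemon properties discussed so far can be checked with a small script before testing the connection. The following is a rough sketch, not a full sshd_config parser: it assumes simple "keyword value" lines, takes the first occurrence of a keyword, and ignores Match blocks. The function name and the sample text are hypothetical.

```python
from typing import Optional


def sshd_option(config_text: str, name: str) -> Optional[str]:
    """Return the first value set for a keyword in sshd_config text, or None."""
    for raw in config_text.splitlines():
        line = raw.strip()
        # Skip blank lines and comments.
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        # Keywords are compared case-insensitively.
        if len(parts) == 2 and parts[0].lower() == name.lower():
            return parts[1].strip().lower()
    return None


# Hypothetical excerpt of /etc/ssh/sshd_config on a Hadoop node.
sample = """
# Kerberos / GSSAPI options
GSSAPIAuthentication yes
GSSAPIStrictAcceptorCheck no
"""

for keyword in ("GSSAPIAuthentication", "GSSAPIStrictAcceptorCheck"):
    print(keyword, "=", sshd_option(sample, keyword))
```

On a real node the text would be read from /etc/ssh/sshd_config; a result of None means the keyword is not set explicitly and the daemon's built-in default applies.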

Java Requirements

Hadoop and the interface used by SAS Cloud Analytic Services are based on Java. Therefore, there are a couple of Java requirements for the configuration to operate correctly.

JAVA_HOME

The script that will be called by the remote connection from the SAS Cloud Analytic Services hosts requires the JAVA_HOME environment variable be correctly set for the Hadoop cluster. The script that is called will run $HADOOP_HOME/bin/hadoop and this script must have a correctly defined value for JAVA_HOME. Most Hadoop distributions will define the JAVA_HOME environment variable in $HADOOP_HOME/libexec/hadoop-config.sh. Therefore, it is important that this script is validated to ensure the correct value of JAVA_HOME is set.

Encryption Strength

The encryption strength used in Kerberos tickets is variable. If the Kerberos tickets are using AES 256-bit encryption, then the Java Unlimited Strength Policy files will be required in the Hadoop cluster. The Hadoop distribution's documentation should be used to ensure the policy files are correctly deployed.

Remote HDFS Configuration for CAS

To enable SAS Cloud Analytic Services to correctly operate with a remote Hadoop cluster the following items need to be configured.

The cas.settings file

The cas.settings file located in /opt/sas/viya/home/SASFoundation requires four additional properties to enable the use of SASHDAT files. Add the following options to the bottom of the file:

export JAVA_HOME=<PATH TO JRE Directory>
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$JAVA_HOME/lib/amd64/server
export HADOOP_HOME='<PATH TO HADOOP_HOME>'
export HADOOP_NAMENODE=<name node fully qualified hostname>

Ensure the file is updated on all SAS Cloud Analytic Services nodes.

HADOOP_HOME will be specific to the Hadoop distribution used. For example, with Cloudera it would be '/opt/cloudera/parcels/CDH/lib/hadoop'.

The same properties can also be placed in the casconfig.lua file rather than the cas.settings file. To place them in casconfig.lua, remove export and add the "env." prefix, for example env.JAVA_HOME=<PATH TO JRE Directory>.

The casconfig.lua file

The casconfig.lua file located in /opt/sas/viya/config/etc/cas/default requires two additional properties to enable remote access to HDFS. Add the following two options to the bottom of the file:

env.CAS_ENABLE_REMOTE_SAVE = 1
env.CAS_REMOTE_HADOOP_PATH = '<PATH TO SAS HDAT PLUGINS BIN>'

Ensure the file is updated on all SAS Cloud Analytic Services nodes.

CAS_REMOTE_HADOOP_PATH is the directory where the SAS HDAT Plugin binaries and scripts are located. For example, with Cloudera it would be '/opt/cloudera/parcels/CDH/lib/hadoop/bin'.

Playbook Changes

The changes required for cas.settings and casconfig.lua can be made before the deployment or after the initial deployment. Whenever they are made, the changes should be reflected in the playbook files so that they are maintained if the playbook is ever run again. The changes can be made in the vars.yml file.

To make the changes detailed in the previous two sections within the vars.yml find the section CAS_SETTINGS and add the following to the end of the examples:

CAS_SETTINGS:
  1: JAVA_HOME=<PATH TO JRE Directory>
  2: LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$JAVA_HOME/lib/amd64/server
  3: HADOOP_HOME='<PATH TO HADOOP_HOME>'
  4: HADOOP_NAMENODE=<name node>

HADOOP_HOME will be specific to the Hadoop distribution used. For example, with Cloudera it would be '/opt/cloudera/parcels/CDH/lib/hadoop'.

Then find the section CAS_CONFIGURATION and add the following:

CAS_CONFIGURATION:
  env:
    CAS_ENABLE_REMOTE_SAVE: 1
    CAS_REMOTE_HADOOP_PATH: '<PATH TO SAS HDAT PLUGINS BIN>'
  cfg:
    colocation: 'hdfs'
    mode: 'mpp'

CAS_REMOTE_HADOOP_PATH is the directory where the SAS HDAT Plugin binaries and scripts are located. For example, with Cloudera and a manual deployment of the HDAT Plugins it would be '/opt/cloudera/parcels/CDH/lib/hadoop/bin'.

Kerberos for Visual Interfaces

Standard Setup

To enable Kerberos outbound authentication for SAS Cloud Analytic Services the casconfig.lua must be updated. This update will ensure that the CAS Controller correctly initializes a Kerberos Ticket-Granting Ticket.

The casconfig.lua located in /opt/sas/viya/config/etc/cas/default should have the following changes made:

- Update the value of cas.provlist to include initkerb, the completed line will look like the following:

cas.provlist = 'oauth.ext.initkerb'

- Add environment variables, if required, to change the Service Class and keytab location with the following:

-- Add Env Variable for SPN
env.CAS_SERVER_PRINCIPAL = 'CAS/HOSTNAME.COMPANY.COM'
-- Add Env Variable for keytab location
env.KRB5_KTNAME = '/opt/sas/cas.keytab'

These changes can then be reflected in the vars.yml within the playbook by adding the following to the CAS_CONFIGURATION section:

CAS_CONFIGURATION:
  env:
    CAS_SERVER_PRINCIPAL: 'CAS/HOSTNAME.COMPANY.COM'
    KRB5_KTNAME: '/opt/sas/cas.keytab'

Remember from the previous post that we recommend configuring Kerberos only post-deployment. Attempts to set cas.provlist as part of the deployment will fail.

Remote Specific

The next concern is specific to remote access to HDFS. We need to ensure the user account used by the Visual Interfaces can make an SSH connection using the GSSAPI. Since the Kerberos Ticket-Granting Ticket will be obtained for the principal <Service Class>/<Hostname>, we need to ensure this is correctly mapped to a real user account on the Hadoop nodes. Otherwise the SSH connection using GSSAPI will fail.

Note: This does not change the user account that will access HDFS, only the user account connecting with SSH.

The mapping can be defined in the Kerberos configuration file on the Hadoop cluster. This will need to be updated on all nodes. This can either be managed by the Hadoop distribution or managed manually by updating the /etc/krb5.conf.

Within the Kerberos configuration file, for example /etc/krb5.conf, locate the REALM definition and add the following:

auth_to_local_names = {
    sascas/<hostname> = cas
}

This will ensure for the SSH connection the Kerberos principal name is correctly mapped to the required operating system user.
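The effect of this mapping can be pictured with a short Python sketch. It models only the explicit name lookup for principals in the realm where the mapping is defined; a real Kerberos library falls back to other rules when no explicit entry exists, which this sketch represents by returning None. The function name, hostname and realm are hypothetical.

```python
from typing import Dict, Optional


def map_principal(principal: str, realm: str, names: Dict[str, str]) -> Optional[str]:
    """Look up the local account for a principal via an explicit name mapping.

    names mirrors the auth_to_local_names entries defined for realm.
    """
    name, sep, principal_realm = principal.rpartition("@")
    # Only principals from the realm that carries the mapping are considered.
    if not sep or principal_realm != realm:
        return None
    return names.get(name)


# Hypothetical values mirroring the sascas/<hostname> = cas entry.
mapping = {"sascas/cas01.company.com": "cas"}
print(map_principal("sascas/cas01.company.com@COMPANY.COM", "COMPANY.COM", mapping))
```

With the entry in place the principal resolves to the cas account, which is the account the SSH Daemon on the Hadoop node will use for the incoming connection.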

Kerberos for Programming Interfaces

To make use of remote HDFS from the Programming Interfaces, such as SAS Studio, we do not need to complete any additional specific steps. The users should already be obtaining a Kerberos Ticket-Granting Ticket (TGT) as part of the Pluggable Authentication Module authentication carried out by the CAS Controller. With this TGT available to the CAS Controller, and with the prerequisites above completed, it should be possible to make an SSH connection using the GSSAPI.

Validating

With all the preceding steps complete it should now be possible to access the remote Secured Hadoop cluster to read/write SAS HDAT files.

Validate from SAS Studio

To validate from SAS Studio you can use the following code:

/* start session */
cas mysession;

/* assign caslib */
caslib testhdat datasource=(srctype="hdfs") path="/tmp";

/* load sashelp.heart */
proc casutil;
   load data=sashelp.heart;
run;

/* save test */
proc casutil;
   save casdata="heart" replace;
run;

/* load test */
proc casutil;
   load casdata="heart.sashdat" outcaslib="casuserhdfs" casout="workingheart";
run;

/* end session */
cas mysession terminate;

You will need to log into SAS Studio using an end-user who has valid Kerberos credentials and can write to /tmp in HDFS.

You should be able to validate the SAS HDAT file exists in HDFS with the Hadoop command-line utilities. For example:

hadoop fs -ls /tmp

Will show:

Found 4 items
drwxrwxrwx   - hdfs   supergroup       0 2017-08-01 05:34 /tmp/.cloudera_health_monitoring_canary_files
-rw-rw-rw-   1 sasadm supergroup 1170432 2017-08-01 05:28 /tmp/heart.sashdat
drwx-wx-wx   - hive   supergroup       0 2017-05-24 09:15 /tmp/hive
drwxrwxrwt   - mapred hadoop           0 2017-05-24 10:21 /tmp/logs
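If the check needs to be scripted, the listing can be parsed rather than read by eye. The sketch below assumes the eight-column layout shown in the listing above (permissions, replication, owner, group, size, date, time, path); the function name is hypothetical and the sample text is simply that listing.

```python
def hdfs_ls_entries(output: str) -> dict:
    """Parse 'hadoop fs -ls' text into {path: {"owner", "size", "is_dir"}}."""
    entries = {}
    for line in output.splitlines():
        # Split into at most eight fields so the path is kept in one piece.
        fields = line.split(None, 7)
        if len(fields) < 8:
            continue  # skips the "Found N items" header and blank lines
        perms, _replication, owner, _group, size = fields[:5]
        entries[fields[7]] = {
            "owner": owner,
            "size": int(size),
            "is_dir": perms.startswith("d"),
        }
    return entries


listing = """Found 4 items
drwxrwxrwx   - hdfs   supergroup       0 2017-08-01 05:34 /tmp/.cloudera_health_monitoring_canary_files
-rw-rw-rw-   1 sasadm supergroup 1170432 2017-08-01 05:28 /tmp/heart.sashdat
drwx-wx-wx   - hive   supergroup       0 2017-05-24 09:15 /tmp/hive
drwxrwxrwt   - mapred hadoop           0 2017-05-24 10:21 /tmp/logs
"""

heart = hdfs_ls_entries(listing).get("/tmp/heart.sashdat")
print("found" if heart else "missing", heart)
```

The owner field is the useful part here: it shows which account actually wrote the file to HDFS, which is sasadm in this listing.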

Validating from SAS Environment Manager

To validate from SAS Environment Manager, we will attempt a couple of different tasks. These will require some content to be created in HDFS. First, we need to create an HDFS directory for the end-user accessing SAS Environment Manager. We will use a member of SAS Administrators, since authorization is out of scope for what we are testing. You can use the Hadoop command-line tools to create this directory for the end-user; here we will use an end-user called sasadm:

hadoop fs -mkdir /user/sasadm; hadoop fs -chown sasadm /user/sasadm; hadoop fs -chmod 755 /user/sasadm

Next, we will create a directory that is only accessible to the sascas user:

hadoop fs -mkdir /tmp/sascas; hadoop fs -chown sascas /tmp/sascas; hadoop fs -chmod 700 /tmp/sascas

Finally, we will copy the SAS HDAT file created from SAS Studio into these directories:

hadoop fs -cp /tmp/heart.sashdat /user/sasadm; hadoop fs -cp /tmp/heart.sashdat /tmp/sascas; hadoop fs -chown sasadm /user/sasadm/heart.sashdat; hadoop fs -chown sascas /tmp/sascas/heart.sashdat; hadoop fs -chmod 600 /user/sasadm/heart.sashdat; hadoop fs -chmod 600 /tmp/sascas/heart.sashdat

These commands will ensure each file is readable only by its owner, who is also the owner of the containing directory.
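For readers less used to octal modes, the three values used in the chmod commands above can be rendered in the same rwx notation that hadoop fs -ls prints. This sketch uses Python's standard stat module; the describe helper is a hypothetical name introduced for illustration.

```python
import stat


def describe(mode_octal: str, is_dir: bool) -> str:
    """Render an octal mode such as '700' in ls-style rwx notation."""
    type_bits = stat.S_IFDIR if is_dir else stat.S_IFREG
    return stat.filemode(type_bits | int(mode_octal, 8))


print(describe("755", True))   # /user/sasadm: drwxr-xr-x
print(describe("700", True))   # /tmp/sascas: drwx------
print(describe("600", False))  # heart.sashdat copies: -rw-------
```

So the /user/sasadm directory can be listed by anyone, the /tmp/sascas directory only by sascas, and each copy of the file can be read and written only by its owner.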

Then log into SAS Environment Manager as the end-user (sasadm in our case). Select Data and then Libraries. First check the content of CASUSERHDFS(sasadm), which is the /user/sasadm directory in HDFS, by double-clicking the library in SAS Environment Manager. While we can see the content of the library, we cannot see the table. This is because we are connecting to HDFS as the sascas user and not the sasadm user.

Return to the library list and select New Library. Change the data source type to HDFS, enter /tmp/sascas as the path and SASCAS as the name. Select Save. Double-click the new SASCAS library and this time the HEART table will be shown. Right-click HEART and select Load. The table should then be loaded into SAS Cloud Analytic Services; the row count should be 1,000 and the column count should be 17. Right-click HEART and select Unload, then select Yes in the confirmation dialog.

Cleaning Up

To clean up in SAS Environment Manager, return to the library list, right-click SASCAS and select Delete. Select Yes in the confirmation dialog.

To clean up in HDFS, run the following commands as the Hadoop super user:

hadoop fs -rm /tmp/heart.sashdat; hadoop fs -rm /tmp/sascas/heart.sashdat; hadoop fs -rm /user/sasadm/heart.sashdat; hadoop fs -rmdir /tmp/sascas

This will remove the three copies of the HEART table and the directory for sascas.

Stuart Rogers
