Quick tips for setting your Gradient Boosting node properties in SAS® Enterprise Miner™

The Gradient Boosting node, found on the Model tab of the SAS Enterprise Miner toolbar, trains a gradient boosting model: a sequence of decision trees that together form a single predictive model. Each tree in the sequence is fit to the residuals of the predictions from the earlier trees, where the residuals are calculated in terms of the derivative of a loss function. The resulting ensemble, which combines the predictions from all of the decision trees, often outperforms other machine learning algorithms in prediction accuracy, which has made gradient boosting extremely popular.

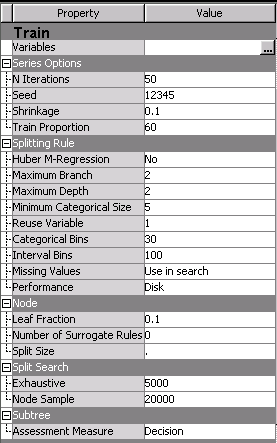
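
To make the residual-fitting idea concrete, here is a minimal sketch in Python. It is not SAS code and not how the Gradient Boosting node is implemented. It assumes a squared error loss, a single interval input, and one-split trees, and the function names and toy data are made up for illustration.

```python
# Illustrative sketch only: squared error loss, one input, one-split trees.
# Function names and data are hypothetical, not part of SAS Enterprise Miner.

def fit_stump(x, residuals):
    """Fit a one-split tree to the residuals by minimizing squared error."""
    overall_mean = sum(residuals) / len(residuals)
    best_sse, best_rule = None, (None, overall_mean, overall_mean)
    for threshold in sorted(set(x))[:-1]:  # splits that leave both sides non-empty
        left = [r for xi, r in zip(x, residuals) if xi <= threshold]
        right = [r for xi, r in zip(x, residuals) if xi > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best_sse is None or sse < best_sse:
            best_sse, best_rule = sse, (threshold, left_mean, right_mean)
    return best_rule


def stump_predict(rule, xi):
    threshold, left_mean, right_mean = rule
    if threshold is None:  # no valid split was found
        return left_mean
    return left_mean if xi <= threshold else right_mean


def boost(x, y, n_iterations, shrinkage):
    """Return the initial prediction and the sequence of fitted stumps."""
    initial = sum(y) / len(y)  # start from the mean of the target
    predictions = [initial] * len(y)
    stumps = []
    for _ in range(n_iterations):
        # For squared error loss, the negative derivative of the loss
        # with respect to the prediction is the ordinary residual.
        residuals = [yi - pi for yi, pi in zip(y, predictions)]
        rule = fit_stump(x, residuals)
        stumps.append(rule)
        predictions = [pi + shrinkage * stump_predict(rule, xi)
                       for xi, pi in zip(x, predictions)]
    return initial, stumps


def predict(initial, stumps, shrinkage, xi):
    # Ensemble prediction: initial value plus the shrunken output of every tree.
    return initial + shrinkage * sum(stump_predict(rule, xi) for rule in stumps)


def mean_squared_error(initial, stumps, shrinkage, x, y):
    errors = [(yi - predict(initial, stumps, shrinkage, xi)) ** 2
              for xi, yi in zip(x, y)]
    return sum(errors) / len(errors)


x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [1.0, 1.2, 0.9, 1.1, 3.0, 3.2, 2.9, 3.1]

initial, stumps = boost(x, y, n_iterations=50, shrinkage=0.1)
baseline_mse = mean_squared_error(initial, [], 0.1, x, y)
boosted_mse = mean_squared_error(initial, stumps, 0.1, x, y)
print(baseline_mse, boosted_mse)
```

The training error of the boosted series falls below that of the mean-only baseline. Training error alone says nothing about overfitting, which is why the validation checks discussed later matter.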

With this modeling algorithm come several hyperparameters. When training a gradient boosting model in SAS Enterprise Miner, some of these hyperparameters are exposed to you as properties of the Gradient Boosting node, and of course, their optimal values are data dependent. The hyperparameters for a gradient boosting model can be divided into two categories: those related to growing the decision trees (primarily in the Splitting Rule, Node, and Split Search property groups) and those related to the boosting process (primarily in the Series Options group). Here are recommendations provided by several of my SAS colleagues for how to adjust the properties representing the hyperparameters in each of these categories to help you train an accurate but generalizable gradient boosting model. Note that my colleague Brett Wujek posted a similar tip for the HP Forest node that you might find helpful if you are fitting forest models in SAS Enterprise Miner.

Growing the decision trees

Increasing the size of the decision trees used in a gradient boosting model can improve the accuracy of your predictions, though at the risk of overfitting your training data. Here are some properties in the "Splitting Rule" and "Node" sections that you can try adjusting in the following ways, mostly to grow larger trees:

- Increase Maximum Depth to 4, 5, or 6.

- Increase Reuse Variable to 2 or more (up to Maximum Depth), so an input can be used multiple times for splitting if it produces the best split.

- Decrease Leaf Fraction to 0.01, 0.005, or 0.001. Often just changing this one property can make a significant difference in your model! NOTE: in SAS Enterprise Miner 15.1, the default for this property is now 0.001.

- For an interval target that is highly skewed, change the Huber M-Regression property from “No” to a numeric value, which is used as the threshold. Residuals are then calculated using a Huber M-regression loss function, which is less sensitive to extreme target values than the default squared error loss function.
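
To illustrate why a Huber-type loss is less sensitive to extreme target values, the following Python sketch compares the residual that the next tree would be fit to under each loss. It uses the textbook Huber loss; whether the Gradient Boosting node uses exactly this form is an assumption, and the threshold and data values are arbitrary.

```python
# Textbook Huber loss, shown for illustration only. That SAS Enterprise Miner
# uses exactly this form is an assumption; the values below are arbitrary.

def squared_error_residual(y, prediction):
    """Negative derivative of 0.5 * (y - prediction)**2."""
    return y - prediction


def huber_loss(y, prediction, threshold):
    """Quadratic for small errors, linear beyond the threshold."""
    r = abs(y - prediction)
    if r <= threshold:
        return 0.5 * r ** 2
    return threshold * (r - 0.5 * threshold)


def huber_residual(y, prediction, threshold):
    """Negative derivative of the Huber loss: the residual clipped at +/- threshold."""
    r = y - prediction
    return max(-threshold, min(threshold, r))


targets = [1.0, 2.0, 3.0, 250.0]  # the last value is an extreme target
prediction = 2.0
threshold = 5.0

for y in targets:
    print(y,
          squared_error_residual(y, prediction),
          huber_residual(y, prediction, threshold))
```

Under squared error, the extreme target contributes a residual of 248 and dominates the next tree. Under the Huber loss, its residual is capped at the threshold, so it pulls no harder than any other observation beyond that distance.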

Boosting process

As with the size of the tree, the length of the boosting process can affect your prediction accuracy, but also can introduce overfitting. This makes it important to use a validation partition and check the Subseries Plot in the results to make sure you have a generalizable model. With that in mind, you can try the following modifications to the Gradient Boosting node property values that are in the "Series Options" section:

- Increase N Iterations to values of 100, 500, 1000, or above to include more trees in your boosting series. You can simultaneously decrease the Shrinkage property to values below 0.1, down to 0.001. The Shrinkage property controls the shrinkage factor, also known as the “learning rate.” A lower shrinkage factor helps offset the overfitting caused by more iterations.

- Increase the Train Proportion property to values such as 70, 80, or 90.
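
The trade-off between shrinkage and the number of iterations can be sketched with a back-of-the-envelope calculation in Python. It rests on an idealized assumption: squared error loss, with each tree fitting the current residuals exactly. In that case every iteration leaves a fraction (1 - shrinkage) of the residual behind. Real trees of limited depth fit less well, so treat the numbers as rough orders of magnitude and not as recommendations. The function names are hypothetical.

```python
import math

# Idealized illustration: assumes squared error loss and that each tree
# reproduces the current residuals exactly, which real trees do not.

def remaining_residual_fraction(shrinkage, n_iterations):
    """Fraction of the initial residual left after n_iterations."""
    return (1.0 - shrinkage) ** n_iterations


def iterations_needed(shrinkage, tolerance=0.01):
    """Smallest iteration count that shrinks the residual below the tolerance."""
    return math.ceil(math.log(tolerance) / math.log(1.0 - shrinkage))


for shrinkage in (0.1, 0.01, 0.001):
    print(shrinkage, iterations_needed(shrinkage))
```

Even in this simplified setting, each tenfold decrease in shrinkage calls for roughly ten times as many iterations, which is why the two properties are usually adjusted together.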

One more trick…

The Gradient Boosting node bins interval inputs when creating decision tree splits and exposes the Interval Bins property to control the number of equal-width bins to use. If you have skewed interval inputs, however, it can be better to use other binning methods, such as quantile binning or tree-based binning (with respect to your target). To bin your skewed interval inputs, you can use either the Interactive Binning node to perform quantile binning or the Transform Variables node to perform quantile or tree-based (“Optimal”) binning prior to running the Gradient Boosting node.
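
The difference between equal-width and quantile binning on a skewed input is easy to see in a small Python sketch. The data and function names are invented for illustration, and the sketch does not reproduce how the SAS nodes compute their bins.

```python
import statistics

# Illustration only: invented data, hypothetical function names.

def equal_width_edges(values, n_bins):
    """Interior cut points that split the range into bins of equal width."""
    low, high = min(values), max(values)
    width = (high - low) / n_bins
    return [low + width * i for i in range(1, n_bins)]


def quantile_edges(values, n_bins):
    """Interior cut points that put roughly equal numbers of values in each bin."""
    return statistics.quantiles(values, n=n_bins)


def bin_counts(values, edges):
    """Count how many values fall into each bin defined by the cut points."""
    counts = [0] * (len(edges) + 1)
    for value in values:
        index = sum(1 for edge in edges if value > edge)
        counts[index] += 1
    return counts


skewed = [1, 2, 3, 4, 5, 6, 7, 8, 10, 20, 50, 100]  # long right tail

equal_width_counts = bin_counts(skewed, equal_width_edges(skewed, 4))
quantile_counts = bin_counts(skewed, quantile_edges(skewed, 4))
print(equal_width_counts)
print(quantile_counts)
```

With equal-width bins, most of the values land in the first bin, so the tree has few candidate split points where the data actually is. Quantile bins spread the observations evenly across the bins.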

Finally, if you are using SAS Visual Data Mining and Machine Learning on SAS Viya, the GRADBOOST procedure is available for training gradient boosting models. You can access this procedure in SAS Studio either programmatically or through the Gradient Boosting task, or include it in your Model Studio pipeline with the Gradient Boosting node. Either way, you have access to the “autotuning” capabilities that help find good values of the hyperparameters via a variety of search methods, including grid search and Latin hypercube sample search. You can also incorporate the GRADBOOST procedure with autotuning enabled into your process flow diagram in SAS Enterprise Miner; see my tip on the SAS Viya Code node to see how.
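
For readers unfamiliar with Latin hypercube sampling, here is a generic Python sketch of the idea. It is not the GRADBOOST autotuning implementation, and the hyperparameter names and ranges are hypothetical. Each range is cut into as many equal strata as there are samples, and every stratum is used exactly once per hyperparameter, so a handful of candidates covers each range more evenly than the same number of purely random draws.

```python
import random

# Generic Latin hypercube sampling sketch. This is not the GRADBOOST
# autotuning implementation; names and ranges below are hypothetical.

def latin_hypercube(ranges, n_samples, seed=0):
    """Return n_samples candidate settings, one per stratum in every dimension."""
    rng = random.Random(seed)
    columns = {}
    for name, (low, high) in ranges.items():
        # One random point inside each of n_samples equal-width strata.
        points = [low + (high - low) * (i + rng.random()) / n_samples
                  for i in range(n_samples)]
        rng.shuffle(points)  # pair strata randomly across dimensions
        columns[name] = points
    return [{name: columns[name][i] for name in ranges}
            for i in range(n_samples)]


search_space = {
    "shrinkage": (0.001, 0.1),
    "train_proportion": (60.0, 90.0),
}

candidates = latin_hypercube(search_space, n_samples=5)
for candidate in candidates:
    print(candidate)
```

A grid search over the same two ranges with five levels each would need 25 model fits, whereas this sample tries five settings while still touching every fifth of each range.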

Acknowledgments: thank you to Tobias Kuhn, Padraic Neville, Ralph Abbey, Sanford Gayle, and Lorne Rothman for their input on this post.


In SAS Enterprise Miner Gradient Boosting, for a binary target using the square loss function and best assessment value = average square error, I see that the scoring code initializes these two variables: _ARB_F = 1.09944342 and _ARBBAD_F = 0.

For an interval target, I found that _ARB_F is initialized to the mean of my target variable, but for a binary target, how is _ARB_F initialized? Please help.
