- Home

- /

- SAS Communities Library

- /

- Import Impala tables into LASR with SAS EP

- RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Import Impala tables into LASR with SAS EP

- Article History

- RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

As an Impala user, we ask this question, what is the most efficient way to import tables from Impala to LASR? Can the SAS Embedded Process (EP) be used for this purpose? In this post, I talk about loading tables into LASR from Impala using Impala and a Hadoop (Hive) engine with SAS EP.

Impala is a distributed, Massively Parallel Processing (MPP) SQL query engine that enables users to issue low-latency SQL against data stored in Hive, HDFS, and Apache HBase without requiring data movement or transformation.

Impala executes SQL queries natively without translating them into MapReduce jobs.

Impala and Hive share the same metadata repository, which enables users to read Impala tables from Hive and vice versa.

Considering the shared metadata repository between Impala and Hive, the Impala tables can be read from SAS using both SAS/ACCESS to Impala and SAS/ACCESS to Hadoop (Hive) engines. So, which method is more efficient for importing Impala tables to LASR?

SAS/ACCESS to Impala is an ODBC connection to Impala (Hadoop). It does not support interactions with SAS EP on a Hadoop cluster. One of the reasons that the Impala engine cannot interact with SAS EP is because Impala executes SQL queries natively whereas SAS EP works with MapReduce. When using an Impala engine to read Impala tables in order to load them into LASR, the process is unable to request SAS EP to schedule the MapReduce task that loads into LASR in parallel mode. Hence, the process loads data into LASR using a single channel between NameNode and LASR RootNode.

As Impala shares the metadata repository with Hive, Hive can read Impala tables in the same way as it reads any other Hive table. This feature enables SAS/ACCESS to Hadoop (Hive) engine to read Impala tables and uses the SAS Embedded Process to load into LASR using parallel data feeder threads. Only, HPA PROCs (PROC LASR and HPDS2) can trigger parallel data loads into LASR using SAS EP. As a result, it seems that using the SAS/ACCESS to Hadoop (Hive) engine may be more efficient.

The example below shows a table list from both the Impala Shell and Hive prompts. Both lists show the same number of tables.

Table list from Impala shell prompt:

[sascdh01.xxxx.xxx.xxx:21000] > show tables;

Query: show tables

+---------------------+

| name |

+---------------------+

| active_customers |

| cars |

| cars_impala |

| cust_hv |

| customers |

| customers_gold |

| order_fact_hive |

| order_fact_impala |

| order_fact_impala_s |

| stocks |

+---------------------+

Fetched 10 row(s) in 0.01s

[sascdh01.xxx.xxx.xxx:21000] >

Table list from Hive prompt:

hive> show tables;

OK

active_customers

cars

cars_impala

cust_hv

customers

customers_gold

order_fact_hive

order_fact_impala

order_fact_impala_s

stocks

Time taken: 0.704 seconds, Fetched: 10 row(s)

hive>

Sample Code to load an Impala table into LASR using Impala engine.

OPTION SET=GRIDHOST="sasserver01";

OPTION SET=GRIDINSTALLLOC="/opt/sas/TKGrid_2.9/CDH520_REP/TKGrid_REP";

OPTION SET=GRIDRSHCOMMAND="/usr/bin/ssh";

libname HDFS IMPALA server="sascdh01.xxxx.xxx.com" user=hadoop port=21050 database=default ;

libname lasr_ep sasiola port=54321 tag='HDFS';

* Drop existing table;

%vdb_dt(lasr_ep.order_fact_impala_s);

* Load to LASR ;

proc lasr add port=54321 data=HDFS.order_fact_impala_s ;

run;

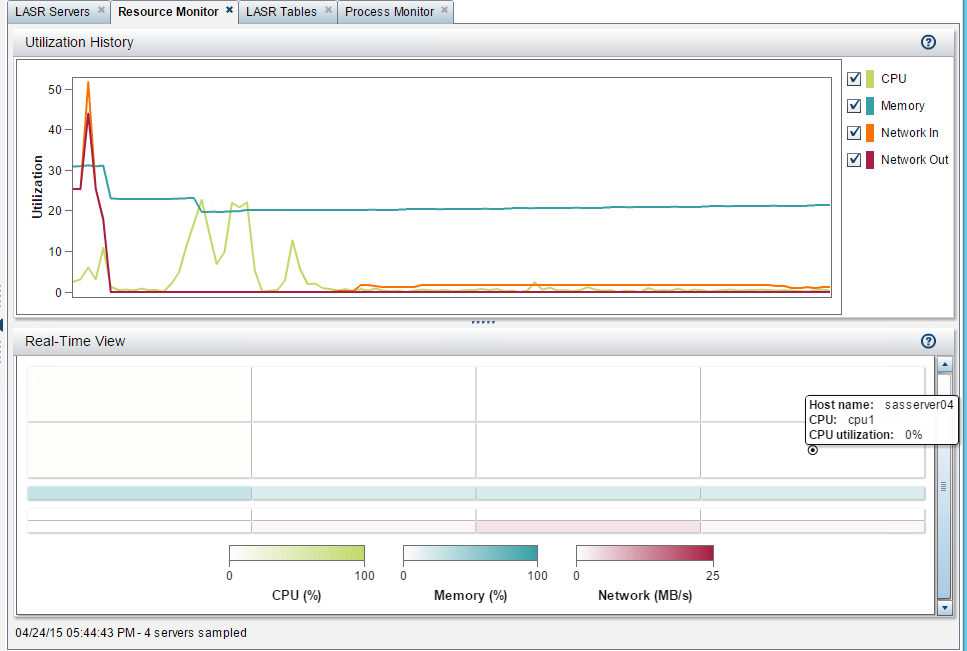

Resource utilization history while loading an Impala table into LASR using an Impala engine

While the data load is running using an Impala engine, it has been noticed that the LASR RootNode is performing major activities with Network-In and Network-out traffic. The LASR WorkerNode is not so busy and is just engaged in network-in traffic.

Sample code to load an Impala table into LASR using a Hadoop (Hive) engine

OPTION SET=GRIDHOST="sasserver01";

OPTION SET=GRIDINSTALLLOC="/opt/sas/TKGrid_2.9/CDH520_REP/TKGrid_REP";

OPTION SET=GRIDRSHCOMMAND="/usr/bin/ssh";

OPTION SET=SAS_HADOOP_JAR_PATH="/opt/sas/thirdparty/Hadoop_Jars/CDH520";

OPTION SET=SAS_HADOOP_CONFIG_PATH="/opt/sas/thirdparty/Hadoop_Config/CDH520";

libname HDFS hadoop user=hadoop server="sascdh01.xxxx.xxx.com" port=10000 SUBPROTOCOL=hive2 ;

libname lasr_ep sasiola port=54321 tag='HDFS';

* Drop existing table;

%vdb_dt(lasr_ep.order_fact_impala);

* Load to LASR ;

proc lasr add port=54321 data=HDFS.order_fact_impala ;

run;

Resource utilization history while loading an Impala table into LASR using a Hadoop (Hive) engine

While the data load is running using a Hadoop (Hive) engine, it has been noticed that the LASR WorkerNodes are engaged in most of the activity. The LASR RootNode is minimally used as data is passing directly from DataNodes to LASR WorkerNodes. The Network-in traffic on LASR WorkerNodes indicates parallel data load from the Hadoop cluster through SAS EP.

Summary

The most efficient way to load Impala table into LASR is using SAS/ACCESS to Hadoop (Hive) engine in conjunction with SAS Embedded Process configured at the Hadoop cluster.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

This is an AWESOME article! Thanks for posting this!!!

Don't miss out on SAS Innovate - Register now for the FREE Livestream!

Can't make it to Vegas? No problem! Watch our general sessions LIVE or on-demand starting April 17th. Hear from SAS execs, best-selling author Adam Grant, Hot Ones host Sean Evans, top tech journalist Kara Swisher, AI expert Cassie Kozyrkov, and the mind-blowing dance crew iLuminate! Plus, get access to over 20 breakout sessions.

Free course: Data Literacy Essentials

Data Literacy is for all, even absolute beginners. Jump on board with this free e-learning and boost your career prospects.