Event Stream Processing and HPA on Hadoop playing together

After deploying SAS High-Performance Analytics (HPA) Server with In-Database Technologies and LASR on top of a large Hadoop cluster, your customer might want to capture real-time IoT events and store them in Hadoop. The goal is to provide live reports and real-time analytics, and also to feed later offline data cleansing and HP Statistics.

In addition to its own powerful processing capabilities for real-time events, SAS Event Stream Processing (ESP) is a good fit for this use case:

- ESP provides an HDFS adapter whose subscriber client can output multiple HDFS files, split according to a periodicity or a “Maximum File size” parameter.

- ESP can send the events to a SASHDAT table or even directly into LASR, which greatly reduces the time required to provide updated VA/VS reports.

This blog covers key architecture considerations for such use cases, along with implementation guidelines.

Note: SAS HPA, LASR and VA/VS are not mandatory for the ESP-Hadoop integration. However, combining these products is a perfect mix for IoT/Big Data contexts. Each component can work in synergy with the others for the greatest benefit of the customer.

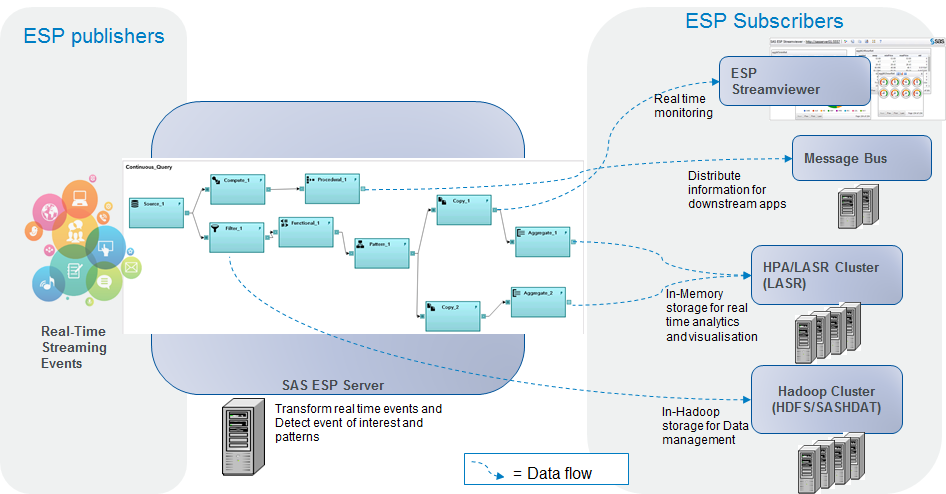

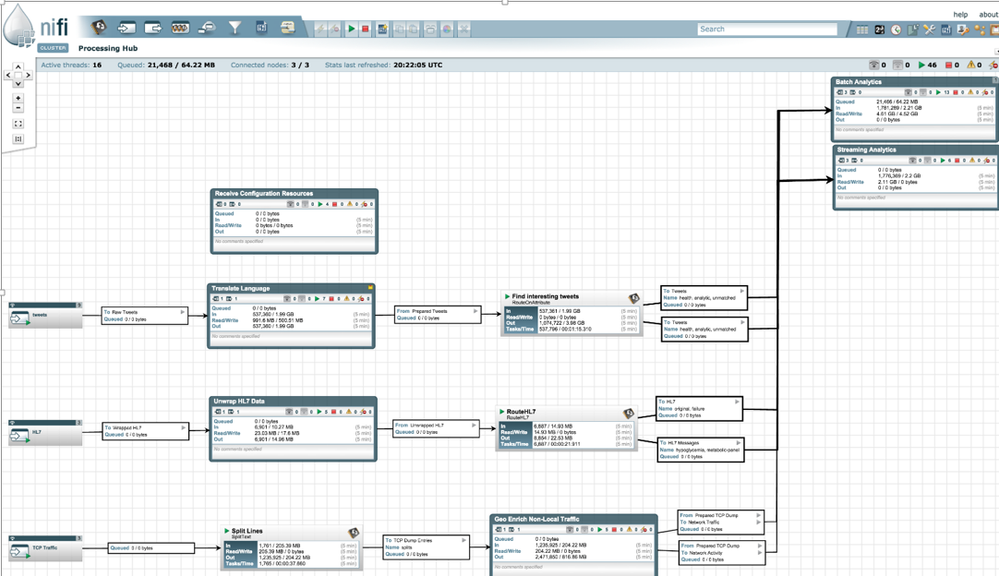

There’s nothing like an overview diagram

ESP can process real-time events at high rates: events are filtered and scanned by ESP's sophisticated rules to detect any required pattern.

We can have various types of subscribers: the Event Streamviewer application for real-time dashboards, message buses to propagate key information to downstream applications, a LASR cluster for near-real-time reports, HDFS for further computation in Hadoop, etc.

Once the "events of interest" have been captured by ESP, they can be stored (with the associated information) either in Hadoop for further analysis and data management operations, or in LASR for near-real-time analytics and visualization.

Key considerations for ESP and Hadoop

- The ESP engine can easily process hundreds of thousands of events per second. Your NameNode will very likely not be able to distribute the rows across HDFS blocks at such a rate, so it is important to keep HDFS write operations from becoming a bottleneck. One way to do so is to build separate, independent flows and filter your events. You might also consider placing a message bus between ESP and Hadoop to buffer your events before they are written to HDFS (the HDFS adapter supports the transport option).

- ESP should not be used as an “ETL tool” for Hadoop, but rather as a way to store "value-added data" in HDFS, for example the output of a Pattern window where the “events of interest” are singled out. Keep in mind that Hadoop and LASR loading are done in serial mode by the ESP adapters.

- All events subscribed to by the HDFS adapter are inserted as new rows in the Hadoop files (keep in mind that Hadoop is not suited to UPDATE operations). Each event processed by the window you are subscribing to is written to HDFS. The ESP adapter can create multiple HDFS files that can be seen as a single table via Hive or using PROC HDMD.
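The buffering idea from the first consideration can be illustrated with a small, generic sketch. This is not ESP or message bus code: `buffered_write`, `write_batch`, and the batch and capacity values are hypothetical names chosen for illustration. A bounded queue sits between a fast producer and a slower writer, and the producer blocks when the buffer is full instead of overwhelming the writer.

```python
import queue
import threading


def buffered_write(events, write_batch, batch_size=100, capacity=1000):
    """Pass events to a slow writer through a bounded buffer, in batches.

    `write_batch` is a hypothetical callback standing in for the slow sink
    (for example, an HDFS write). It receives a list of events.
    """
    buf = queue.Queue(maxsize=capacity)
    sentinel = object()  # marks the end of the stream

    def consumer():
        batch = []
        while True:
            item = buf.get()
            if item is sentinel:
                break
            batch.append(item)
            if len(batch) >= batch_size:
                write_batch(batch)
                batch = []
        if batch:
            # Flush the last, partially filled batch
            write_batch(batch)

    worker = threading.Thread(target=consumer)
    worker.start()
    for event in events:
        buf.put(event)  # blocks while the buffer is full (back-pressure)
    buf.put(sentinel)
    worker.join()
```

Writing in batches keeps the number of sink operations low, while the bounded capacity caps memory use when the sink falls behind.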

An example

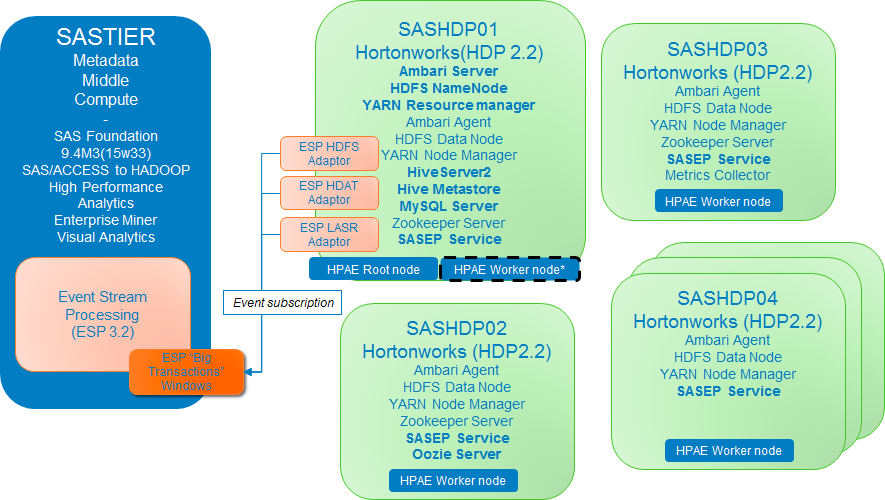

BABAR is our "SAS HPA 9.4 Server on Hadoop" example collection. We will use this collection of machines to describe how the ESP components could be deployed in the existing HPA on Hadoop Architecture. The ESP components are represented in orange.

The Demo

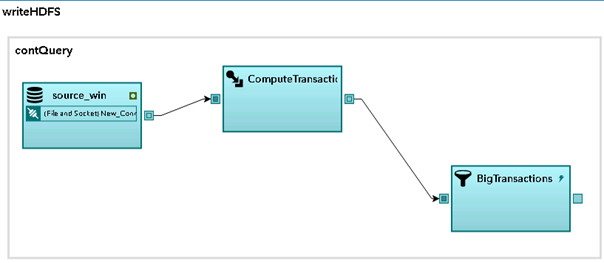

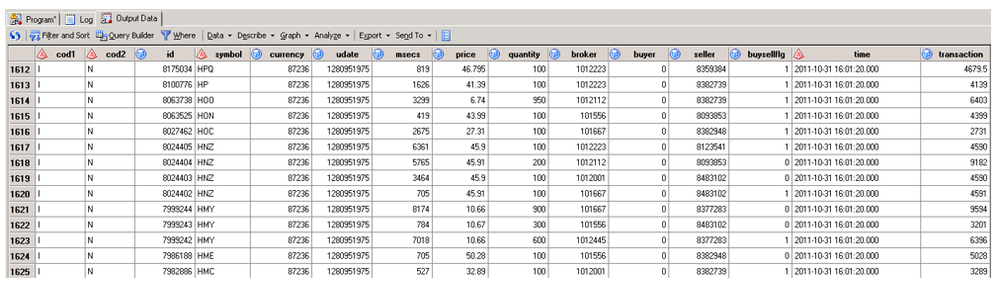

A simplistic ESP model, called “writeHDFS”, has been created in the Babar collection to demonstrate how an adapter on the Hadoop NameNode can subscribe to ESP windows in order to store events in HDFS. The model collects trading events and keeps only the transactions above a certain amount. These “big” transactions are stored in HDFS for further analysis.
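The filtering logic of such a model can be sketched in a few lines of Python. This only illustrates the idea and is not the ESP model itself, which is defined with ESP's own modeling tools. The `Trade` fields, the amount computed as quantity times price, and the threshold are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Trade:
    # Hypothetical event schema, not the schema of the writeHDFS model
    trade_id: int
    symbol: str
    quantity: int
    price: float


def big_transactions(trades, min_amount):
    """Yield only the trades whose amount (quantity * price) exceeds min_amount."""
    for trade in trades:
        if trade.quantity * trade.price > min_amount:
            yield trade
```

Because the function is a generator, it handles events one at a time as they arrive, in the same spirit as a filter window applied to a stream.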

The first thing is to start the ESP server with our model, for example:

There is nothing "Hadoop specific" in the model: it simply collects events and applies a real-time computation and filter to the events streaming in. However, an adapter running on a remote machine will subscribe to this ESP window and store the collected output in HDFS files. In our example, the ESP HDFS adapter runs on the Hadoop NameNode, but it could also run on the ESP server or on any Hadoop edge node that has write access to the HDFS layer of our cluster.

First it is necessary, on the machine running the HDFS adapter, to set the DFESP_HDFS_JARS environment variable with the required Hadoop jar files:

This is actually the trickiest part of the setup: you need to adjust the names of the required jar files to your Hadoop distribution and release. The Hadoop jars configuration above was tested in our Babar test collection (Hortonworks HDP 2.2). Depending on your own Hadoop environment, you will have to find the appropriate location of those jars and directories. Once the environment has been set for the user running the ESP adapter, we can start the HDFS adapter with the command below:

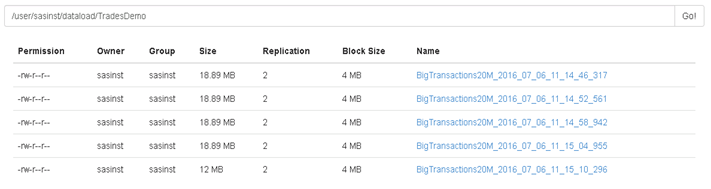

To see files created in HDFS, open the NameNode UI and browse the Hadoop filesystem. HDFS files of 20 MB each are created in real time.
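The size-based file splitting seen here can be illustrated with a local sketch. It is not the adapter's implementation: `RollingWriter`, its parameters, and the file naming scheme are hypothetical, and it writes to the local filesystem rather than HDFS.

```python
import os
import tempfile


class RollingWriter:
    """Append rows to numbered files, starting a new file at a size limit."""

    def __init__(self, directory, prefix, max_bytes):
        self.directory = directory
        self.prefix = prefix
        self.max_bytes = max_bytes
        self.index = 0
        self.current_size = 0
        self.handle = None

    def _open_next(self):
        # Close the current file (if any) and open the next numbered one
        if self.handle is not None:
            self.handle.close()
        self.index += 1
        name = f"{self.prefix}_{self.index:04d}.csv"
        path = os.path.join(self.directory, name)
        self.handle = open(path, "w", encoding="utf-8", newline="\n")
        self.current_size = 0

    def write(self, row):
        line = ",".join(str(value) for value in row) + "\n"
        size = len(line.encode("utf-8"))
        # Roll over before a write that would push the file past the limit
        if self.handle is None or (
            self.current_size > 0 and self.current_size + size > self.max_bytes
        ):
            self._open_next()
        self.handle.write(line)
        self.current_size += size

    def close(self):
        if self.handle is not None:
            self.handle.close()
            self.handle = None


def demo_roll(n_rows=10, max_bytes=40):
    """Write n_rows small rows and return the names of the files produced."""
    with tempfile.TemporaryDirectory() as tmp:
        writer = RollingWriter(tmp, "events", max_bytes)
        for i in range(n_rows):
            writer.write((i, "ACME", 1000 + i))
        writer.close()
        return sorted(os.listdir(tmp))
```

With a limit of `20 * 1024 * 1024` bytes, the same logic would produce files of roughly 20 MB each, as in the demo. A time-based split would follow the same pattern, checking the elapsed time instead of the size.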

Finally, you can use the HDMD engine from SAS to see the collection of HDFS files as a single SAS table. For example, the program below will create the necessary metadata in HDFS to do so:

The "big Transactions table" can now be opened at any time and shows the latest big transactions.

Note: You could also do the equivalent in Hive, referencing the files as a Hive table (see the "LOAD" command).
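To make the "many files, one table" idea concrete, here is a minimal local sketch. It assumes the output files are CSV and sit in one directory. `read_as_single_table` and `demo_single_table` are hypothetical helpers for illustration and do not replace PROC HDMD or a Hive table definition.

```python
import csv
import glob
import os
import tempfile


def read_as_single_table(directory, pattern="*.csv"):
    """Yield the rows of every matching file, in file-name order, as one stream."""
    for path in sorted(glob.glob(os.path.join(directory, pattern))):
        with open(path, newline="", encoding="utf-8") as handle:
            for row in csv.reader(handle):
                yield row


def demo_single_table():
    """Create two small files and read them back as a single list of rows."""
    with tempfile.TemporaryDirectory() as tmp:
        contents = {"part_0001.csv": "1,AAA,2500\n", "part_0002.csv": "2,BBB,4000\n"}
        for name, text in contents.items():
            with open(os.path.join(tmp, name), "w", encoding="utf-8") as handle:
                handle.write(text)
        return list(read_as_single_table(tmp))
```

New files that land in the directory are picked up on the next read, which is why the table always reflects the latest transactions.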

What’s coming Next

In this article we have illustrated a way to interface ESP with HDFS.

However, the new version of ESP will offer new Hadoop integration possibilities with Apache NiFi. Apache NiFi is a new dataflow framework for Hadoop data. More generally, it is a system that automates the flow of data between various systems via “Processors”: HDFS/HBase, SerDe files, Event Hubs, JMS, NoSQL databases (Cassandra, MongoDB), Kafka, SFTP, S3 storage, Twitter feeds, etc.

A special .nar file (a zipped container file that holds multiple JAR files and an .xml metadata file) will be provided as part of ESP 4.1. Once it is deployed in your NiFi setup, you will be able to design your Apache NiFi integration flows using the "ListenESP" and "PutESP" processors. For more information about SAS ESP and NiFi, also check out Mark Lochbihler’s Hortonworks blog on the integration of SAS ESP with "Hortonworks data flow" (NiFi based): Moving Streaming Analytics out of the Data center
