Evaluating the Performance of Retrieval Augmented Generation Pipelines using the Ragas Framework

The purpose of this post is to learn how to use the metrics provided by the Ragas framework to improve the reliability and value of the responses generated by a large language model (LLM) when used with retrieval augmented generation (RAG) to enhance the context. The fundamental idea of RAG is to select useful document chunks to add to the LLM context to help the LLM better answer user queries related to those document chunks. The challenge comes in evaluating the effectiveness of this whole process. We want to make sure that the LLM generates useful answers, but to do this we need to make sure that the document chunks added to the context are relevant to the user query, and that the answer generated by the LLM actually uses information from this context.

This post will focus on how metrics from the open-source Ragas framework can be used to judge various aspects of the RAG pipeline. These metrics are used in a new tool designed to make setting up RAG pipelines easy for users, SAS Retrieval Agent Manager. A subsequent post in this series will look at how we build a RAG pipeline using SAS Retrieval Agent Manager.

At the most basic level the goal of evaluation is to determine if the RAG system is returning the “correct” answer to user queries, but unlike traditional machine learning approaches, the notion of “correct” in this setting cannot be easily expressed in terms of a simple error measure like misclassification or average squared error. There are generally multiple “correct” (or useful and relevant) responses to most user queries, and we are unlikely to be able to create a labeled dataset with sample questions and all possible “correct” answers to these questions. Of course the existence of such a dataset would be helpful in evaluating RAG systems, but even if we build one, we are unlikely to cover the diverse kinds of questions that users could ask the RAG system. And if we could truly map out most possible questions users would ask and the correct answers to those questions, we might not even need an LLM-based RAG system; a searchable bank of questions and correct answers would do.

We will use LLMs themselves to create sample data and evaluate the RAG system, allowing us to build meaningful evaluations without any manually labeled data. Essentially this means that LLMs will be used to generate various kinds of questions and answers (it will work slightly differently for the different metrics we discuss), and different LLMs will evaluate the question/answer pairs to create metrics. This is not guaranteed to be reliable, but it does scale well since the LLMs can generate and evaluate many question/answer pairs without any manual curation or data creation. In some ways this is the fundamental tradeoff in evaluating the outputs of LLMs:

If we are building an LLM system that will be useful in providing knowledge to human beings, we are unlikely to have a curated dataset containing verified versions of that useful knowledge, since then we wouldn’t need the LLM system.

Thus, when you can easily evaluate and verify the LLM outputs, you probably don’t need the LLM at all, and when you do need the LLM, you probably can’t easily evaluate and verify the outputs. This means we need to find clever ways to evaluate LLMs and RAG systems, and using LLMs to judge the output of other LLMs provides a way to automatically create useful evaluations on large document collections at scale. This approach is fundamentally less reliable than having the evaluations painstakingly created by thoughtful human beings, but it is much easier to implement and can still provide a metric that is “directionally” accurate, in that the metric increases when the system is more useful and decreases when the system is less useful.

It is important to keep in mind that a metric like this can be useful in evaluating how to improve the RAG system, even if we don’t fully believe that the metric captures what we are hoping it captures (even if we are skeptical that the LLM did a good job generating evaluation test cases). We can remain doubtful about the LLM generated evaluations while still using them to improve the RAG pipeline (although we might not want to use them to make claims about the performance).

SAS Retrieval Agent Manager will calculate the Ragas metrics for RAG pipeline configurations, allowing users to compare different configurations (different settings and choices for the RAG pipeline) based on their scores:

- Answer Relevance - does the generated response answer the question?

- Context Precision – does the most relevant retrieved content for answering the question get included in the context?

- Context Recall – does the retrieved context contain the necessary information to answer the question?

- Faithfulness – does the generated answer actually use the information from the context?

The plain English descriptions of these metrics give an idea of how they should be used and interpreted, but as we will see in this post, these metrics are not quite as simple as we’d like them to be (for easy interpretation). The first thing to note is that each of these metrics requires an LLM to calculate, so when we talk about “questions”, “answers”, and even a “ground truth”, we are really talking about LLM generated content. Connected to this, some of these metrics require a “ground truth” or reference value for the answer, but we might not have a nicely curated bank of relevant questions with their true answers for our RAG pipeline, so even the “ground truth” will be generated by an LLM. This is a good time to emphasize that we should not use the same LLM for every role: the LLM that generates answers in the RAG pipeline should differ from the LLM that creates the evaluation data and from the LLM that calculates the metrics. Using one model for everything would be like asking students to grade their own exams without providing an answer key. Even aside from any conflicts of interest, we can’t expect students to reliably point out errors in their own work.

Before we get into the details of how these metrics are calculated, it is worth reviewing the basic concepts of Precision and Recall as applied to information retrieval tasks. When retrieving information, we prefer to use precision and recall instead of simpler metrics like misclassification and accuracy because they make clear the distinction between false positives and false negatives. In the context of information retrieval, a false positive would be when we retrieve the wrong information, while a false negative would be when we fail to retrieve the right information. These have different consequences in different settings and thus it’s helpful to evaluate both precision and recall for information retrieval systems.

- Precision is the percentage of correct information retrieved out of all the retrieved information

- True Positives / (True Positives + False Positives)

- Recall is the percentage of correct information retrieved out of all the correct information.

- True Positives / (True Positives + False Negatives)

A high precision system will only retrieve useful information (it will avoid retrieving the wrong information), but it may not retrieve much information at all. In theory if we retrieve a single correct piece of information and nothing else, we will have a value of 1 for precision. A high recall system will retrieve as much of the relevant information as possible, but it could retrieve irrelevant information as well. In theory if we retrieve every single piece of information available, we will have a value of 1 for recall. Neither of these extreme examples is useful, so we are generally looking for both high precision and high recall, with the understanding that they are usually in tension (this wouldn’t be the case if we had a perfect retrieval system, but that is unrealistic).
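To make the two extreme cases concrete, here is a minimal Python sketch of the precision and recall formulas above. The chunk identifiers and the `precision_recall` helper are made up for illustration; they are not part of Ragas or SAS Retrieval Agent Manager.

```python
from __future__ import annotations


def precision_recall(retrieved: set[str], relevant: set[str]) -> tuple[float, float]:
    """Return (precision, recall) for one retrieval result."""
    true_positives = len(retrieved & relevant)   # relevant chunks we retrieved
    false_positives = len(retrieved - relevant)  # irrelevant chunks we retrieved
    false_negatives = len(relevant - retrieved)  # relevant chunks we missed
    precision = true_positives / (true_positives + false_positives) if retrieved else 0.0
    recall = true_positives / (true_positives + false_negatives) if relevant else 0.0
    return precision, recall


# Hypothetical corpus of six chunks, three of which are relevant to the question.
corpus = {"c1", "c2", "c3", "c4", "c5", "c6"}
relevant = {"c1", "c2", "c3"}

cautious = precision_recall({"c1"}, relevant)  # retrieve a single correct chunk
greedy = precision_recall(corpus, relevant)    # retrieve every chunk available

print("cautious (precision, recall):", cautious)
print("greedy   (precision, recall):", greedy)
```

The cautious retriever reaches a precision of 1 but a recall of only one third, while the greedy retriever reaches a recall of 1 but a precision of one half, which is the tension described above.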

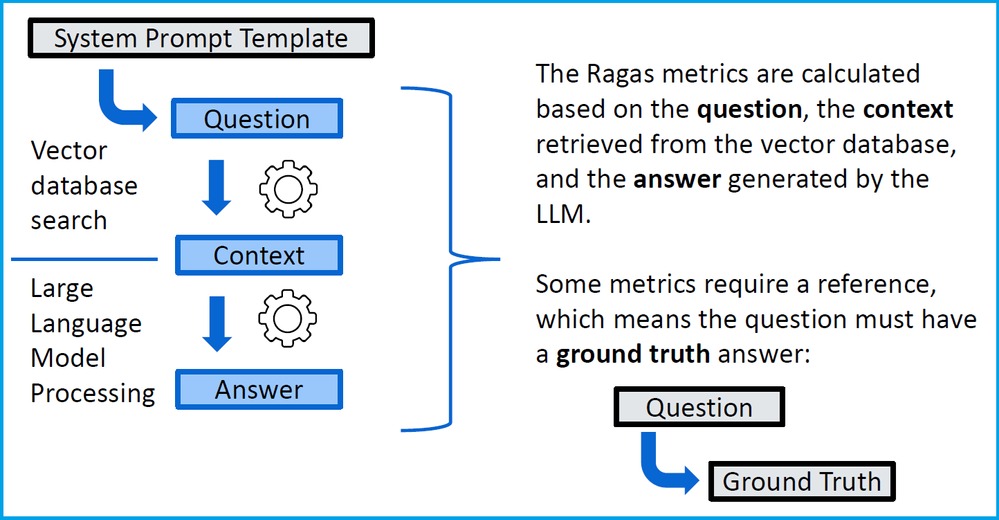

The definitions of the Ragas metrics will depend on some key textual components from the RAG system:

- The question is a stand-in for user generated questions; the whole point of the RAG system is to provide useful responses to these questions. We can create our own user-defined test questions for evaluating the system, or we can let an LLM automatically generate a collection of sample questions based on the information in the vector database.

- The context is the collection of vectorized document chunks retrieved based on the user question. This context is used to help the LLM answer the question accurately and with reference to verified information. Fundamentally this is text that the LLM will process before trying to answer the user question.

- The answer is the response from the LLM based on the user question. The whole goal of these metrics is to ensure that the answer created by the LLM is a useful response to the question.

- As mentioned earlier in this post, precision and recall require some kind of known target value (a reference value), so we think of the ground truth as “the correct answer” (more realistically “a correct answer”) to the question. For user-defined questions we can provide a desired ground truth, but to perform evaluations at scale we will use an LLM to create question – ground truth pairs based on the information in the vector database.

- This is done by extracting “ground truth claims” from the document chunks in the vector database (this is just information from the documents), and then reverse-engineering questions that lead to the ground truth claims as the answer. These question-and-answer pairs are not guaranteed to be accurate, but we are not relying on the LLM to correctly answer questions, we are just relying on it to generate questions based on a correct answer.

Now that we have the building blocks in place for calculating the Ragas metrics let’s dive into the details of how the four metrics are calculated:

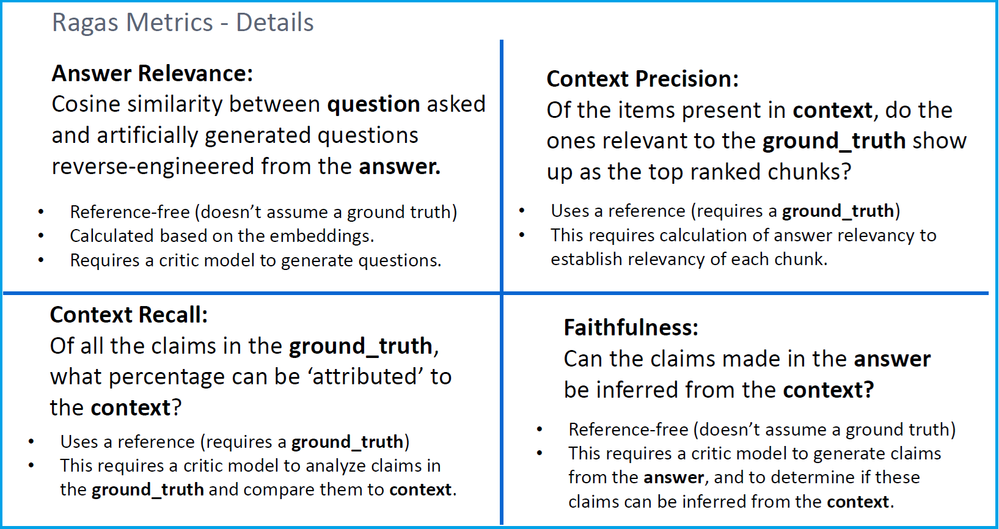

- Answer Relevance is calculated without a reference or ground truth, so we start with a question-and-answer pair (the question could be user-defined or automatically generated by an LLM, and the answer is the LLM response to the question based on the retrieved context).

- An LLM is used to create artificially generated questions that lead to the answer (essentially a prompt is used to generate fake questions based on the answer). The cosine similarity (a standard similarity measure for comparing vector embeddings) is then calculated between the embedding of the original question and the embeddings of the artificially reverse-engineered versions of the question (which came from the answer).

- The idea here is that we want to make sure that the LLM generated answer meaningfully responds to the user’s prompt (the question). If the answer is related to the original question, then the reverse engineered questions based on the answer should be similar in content to the original question. Ideally, we’d have a single metric to compare the answer to the question, but there’s no reason to expect them to be similar, so instead we must compare the question to fake versions of the question created based on the answer.

- Answer Relevance values will be between 0 and 1, with larger values indicating that the answer addresses the question and smaller values indicating that the answer may not have anything to do with the question.

- More specifically, values closer to 1 indicate that the artificially generated questions reverse-engineered from the answer are similar to the original question, while values closer to 0 indicate that these fake questions are not similar to the original question.

- Context Precision requires a ground truth reference, so we start with a question and the ground truth. The RAG system is used to retrieve relevant context based on the question, and these context chunks are compared to the ground truth. Answer relevance is calculated between the retrieved chunks and the ground truth, and the retrieved chunks are ranked based on this answer relevance. This ranking is compared to the original ranking of the retrieved chunks by the RAG system to evaluate if the RAG system is retrieving the correct chunks.

- Precision comes into this calculation because the precision is calculated at each rank (comparing the ranking of the retrieved chunks to the relevance-ranked chunks), and this precision is averaged across all ranks.

- The idea here is that the whole RAG system will not be able to generate accurate answers if it fails to retrieve useful context. If we ask a specific question like “what is the diameter of part A521?”, we can’t expect the system to generate a correct answer unless the diameter of part A521 actually appears somewhere in the retrieved context. There is no point in changing the prompt or the large language model to improve performance of the system if correct answers are not included in the context. Instead, low values of context precision indicate that we need to reconsider how we retrieve relevant chunks for context.

- Context Precision values will be between 0 and 1, with larger values indicating that the RAG system retrieves chunks that are useful for reaching the ground truth answer, and smaller values indicating that the RAG system is retrieving chunks that will not answer the question correctly.

- More specifically, values closer to 1 indicate that the chunk-ranking performed by the RAG system is close to the chunk-ranking calculated by judging the relevance of the retrieved chunks to the ground truth answer.

- Context Recall requires a ground truth reference just like Context Precision and is also used to evaluate the retrieved context and how it is used. In this case the goal is to ensure not that we highly rank relevant results, but that we don’t miss any relevant document chunks in the retrieval process. This is done by analyzing the claims in the ground truth (a critic LLM breaks the ground truth down into distinct claims) and then comparing the claims to the retrieved context and identifying if the claim in the ground truth can be attributed to the context.

- Recall comes into this calculation because we want to ensure that all the claims in the ground truth are supported by the retrieved context, so we calculate recall identifying True Positives as statements in the ground truth that have support from the context, and False Negatives as statements in the ground truth with no support from the context. This is calculated for all extracted claims from the ground truth (and averaged across all question-ground truth pairs in the evaluation data).

- Just like with Context Precision we use an LLM to ‘attribute’ the claims to the retrieved context. This involves a calculation of answer relevancy between the extracted claim and the retrieved context.

- The idea here is that the whole RAG system will not be able to generate reliable and trustworthy answers if the true answer to a question is not available in the context. The model may have information stored in its weights from pre-training on some large internet corpus, but the whole point of a RAG system is that we don’t want to rely on pre-trained weights as a source of knowledge. We want to instead use the RAG system to retrieve information that can be used to answer questions, and we want those answered questions to be based on the retrieved knowledge.

- Faithfulness is calculated without a reference or a ground truth, so we just start with a question and the LLM generated answer to that question. Of course, we also retrieve context based on this question, which is compared to the answer to determine if the answer is supported by the context.

- An LLM is used to extract individual claims from the generated answer, and then each claim is compared to the context to determine if it is supported in the context. This is like what we do with Context Recall, but instead of analyzing “true” claims in the ground truth, we are analyzing generated claims in the answer. Of course, we require a critic LLM to extract the claims from the answer and then an LLM again to attribute the claims to the retrieved context (just like with Context Recall).

- The idea here is that even if we retrieve all the correct context and rank it appropriately (high values of Context Precision and Recall), we don’t have an effective system unless the LLM actually uses the information from the context to generate an answer. Answer Relevance ensures that the answer responds to the question, while Faithfulness ensures that the answer uses information from the context to respond to the question.

- Faithfulness values will be between 0 and 1, since we calculate it by dividing the number of supported claims in the answer by the total number of claims in the answer. Larger values indicate that most of the claims in the answer come from the retrieved context, while smaller values may indicate that the model is pulling information from its stored weights (which could easily be hallucinations).
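The arithmetic behind the four metrics can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the Ragas implementation: the embeddings are hand-written two-dimensional vectors, the LLM judgments (is this chunk relevant, is this claim supported) are stood in for by hard-coded booleans, and the function names are hypothetical. For Context Precision the sketch assumes one common way of averaging precision across ranks, where precision at rank k is counted only at ranks that hold a relevant chunk.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def answer_relevance(question_vec, generated_question_vecs):
    """Mean similarity between the original question and the questions
    reverse-engineered from the answer."""
    sims = [cosine_similarity(question_vec, g) for g in generated_question_vecs]
    return sum(sims) / len(sims)


def context_precision(relevant_flags):
    """Average of precision@k over the ranks k that hold a relevant chunk.
    relevant_flags[i] is the (assumed) LLM verdict for the chunk at rank i + 1."""
    hits, total = 0, 0.0
    for k, is_relevant in enumerate(relevant_flags, start=1):
        if is_relevant:
            hits += 1
            total += hits / k
    return total / hits if hits else 0.0


def supported_fraction(verdicts):
    """Share of claims judged to be supported by the retrieved context."""
    return sum(verdicts) / len(verdicts) if verdicts else 0.0


# Answer Relevance: one generated question matches the original, one does not.
relevance = answer_relevance([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])

# Context Precision: same relevant chunks, ranked well versus ranked poorly.
precision_good = context_precision([True, False, True])
precision_bad = context_precision([False, False, True])

# Context Recall: verdicts on claims extracted from the ground truth.
context_recall = supported_fraction([True, True, False, True])

# Faithfulness: verdicts on claims extracted from the generated answer.
faithfulness = supported_fraction([True, False])

print(relevance, round(precision_good, 3), round(precision_bad, 3))
print(context_recall, faithfulness)
```

Context Recall and Faithfulness share the same ratio here; what differs is where the claims come from (the ground truth versus the generated answer). In a real pipeline each boolean would come from a critic LLM call, which is where the unreliability discussed in this post enters.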

Each of these metrics evaluates the performance of the RAG pipeline in a slightly different way, emphasizing different aspects of the process (retrieving good context, generating reliable answers, responding to user queries), but for all of them a value closer to 1 means a better system and a value closer to 0 means a worse system. SAS Retrieval Agent Manager provides a Ragas score for each configuration (a collection of settings and choices for the RAG pipeline), which is calculated as the harmonic mean of the four individual metrics discussed in this post. This will also always be a number between 0 and 1 (assuming none of the scores are exactly 0, since the harmonic mean takes reciprocals of the scores) and provides an overall guide for the performance of the SAS Retrieval Agent Manager configuration.
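As a small illustration of how a harmonic mean combines the four scores, the sketch below uses Python's standard `statistics` module. The metric values are invented for the example, and the exact aggregation inside SAS Retrieval Agent Manager may differ in details such as how zero scores are handled.

```python
from statistics import harmonic_mean, mean

# Hypothetical metric values for one pipeline configuration.
scores = {
    "answer_relevance": 0.9,
    "context_precision": 0.9,
    "context_recall": 0.9,
    "faithfulness": 0.3,
}

values = list(scores.values())
ragas_score = harmonic_mean(values)  # n / sum(1 / x for each score x)
arithmetic = mean(values)            # shown only for comparison

print(f"harmonic mean:   {ragas_score:.2f}")
print(f"arithmetic mean: {arithmetic:.2f}")
```

With these values the harmonic mean is 0.60 while the arithmetic mean is 0.75. The harmonic mean is pulled toward the weakest metric, so a configuration cannot compensate for poor faithfulness with strong retrieval scores.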

The four metrics (and their harmonic mean) presented in this post are all useful signals for the performance of a RAG pipeline, but they also are all calculated at least partially using LLMs. Traditional machine learning and deep learning metrics are generally fixed formulas computed on labeled data. The Ragas metrics, by contrast, all involve the use of an LLM to evaluate claims or reverse-engineer questions. This is an important point to remember for a couple of reasons:

- The LLM used to evaluate claims (Critic LLM) must be different from the LLM that answers questions (when performing evaluations it is the Eval LLM), and if doing automated evaluations, a different LLM should be used to automatically generate evaluation test cases (this is the Data generation LLM).

- The metrics can be interpreted as a good signal for how the model performs (higher values are better) but should not be interpreted as rigorous guarantees for how the model will perform in deployment.
