Web scraping a CDC.gov site using SAS

Hello Community,

I need to know what I am doing wrong with the code below.

I am interested in this page:

https://wwwn.cdc.gov/nndss/conditions/search/

There are two columns of interest: Name and Notifiable To.

Current code:

filename CDC url "https://wwwn.cdc.gov/nndss/conditions/search/";

data rep;

infile CDC length=len lrecl=32767;

input line $varying32767. len dt $varying32767. len;

if find(line,"nndss/conditions/") then do;

list=scan(line,4,'/');

output;

end;

run;

filename CDC clear;

Thank you

Accepted Solutions

To sum up: you want to use SAS to pull in this web page hosted by the CDC, which contains a large table (among other items), and save just a couple of columns from that table.

I still maintain (as I've said in other similar threads) that this information probably exists somewhere else in a more consumable form, likely from the CDC itself, a US government organization that serves up a tremendous amount of data to the public and to other agencies. It might be worth reaching out to them.

Update: this thread inspired me to write a longer article about web scraping in general: How to scrape data from a web page using SAS.

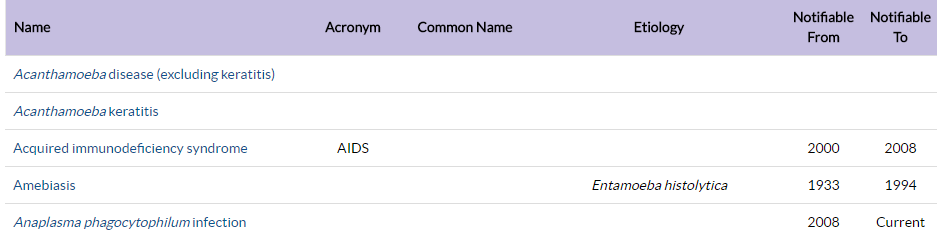

The web table looks like this:

Here's a brute force start at parsing it. You'll have additional cleanup to do.

Note that this relies on the web page's current scheme for line breaks and structure -- it's fragile in that sense.

/* Get all of the nonblank lines */

filename CDC url "https://wwwn.cdc.gov/nndss/conditions/search/";

data rep;

infile CDC length=len lrecl=32767;

input line $varying32767. len;

line = strip(line);

if len>0;

run;

filename CDC clear;

/* Parse the lines and keep just condition names */

/* When a condition code is found, grab the line following (full name of condition) */

/* and the 8th line following (the "Notifiable To" value) */

/* Relies on this page's exact layout and line break scheme */

data parsed (keep=condition_code condition_full note_to);

length condition_code $ 40 condition_full $ 60;

set rep;

if find(line,"/nndss/conditions/") then do;

condition_code=scan(line,4,'/');

pickup= _n_+1 ;

pickup2 = _n_+8;

set rep (rename=(line=condition_full)) point=pickup;

set rep (rename=(line=note_to)) point=pickup2;

output;

end;

run;

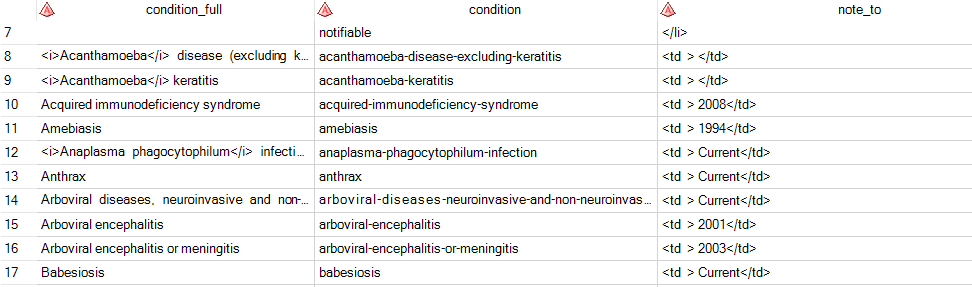

Sample result -- HTML tags and other noise are yours to clean up.
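As one possible starting point for that cleanup, here is a minimal sketch that strips HTML tags and decodes common entities from a character column. The data set `parsed_sample`, its rows, and the new variable `condition_name` are hypothetical stand-ins made up for illustration; they are not real CDC content, and the real page may need more than this.

```sas
/* Hypothetical rows standing in for the PARSED output; not real CDC data. */
/* DATALINES4 is used so that a semicolon inside the data (as in &amp;)   */
/* does not end the data block early.                                     */
data parsed_sample;
  infile datalines4 truncover;
  input condition_full $char80.;
  datalines4;
<a href="/nndss/conditions/example-one/">Example Condition One</a>
<td>  Example A &amp; B  </td>
;;;;
run;

data cleaned;
  set parsed_sample;
  length condition_name $ 80;
  /* Remove anything shaped like a tag: "<", any run of non-">" characters, ">" */
  condition_name = prxchange('s/<[^>]*>//', -1, condition_full);
  /* Decode common entity references such as &amp; */
  condition_name = htmldecode(condition_name);
  /* Collapse repeated blanks and trim both ends */
  condition_name = strip(compbl(condition_name));
run;

proc print data=cleaned noobs;
  var condition_name;
run;
```

The regular expression only removes text that looks like a tag, so it assumes each tag opens and closes on the same line. Applied to the real `parsed` data set, the same three assignments could be repeated for `note_to`.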

Thank you, I'll use this until I find a stable source.

To add on to @ChrisHemedinger's comment, the CDC maintains its own data page here:

There's also an email contact.

Just a note that if you never ask, the data will never change format. We often change how we provide data solely based on requests we receive. If people scrape, we don't know, and we can't make decisions about what to release publicly.

Note that I am not affiliated with the CDC in any manner.

Thank you
