Connection between SAS 9.4 and Cloudera Hadoop thru proc hadoop and li...

Hey, in response to the Proc Hadoop question:

The issue here is that the file you are attempting to copy cannot be found. This is the relevant error line from the Java traceback:

ERROR: java.io.FileNotFoundException: File /home/cloudera/Desktop/samplenew.txt does not exist

Based on the path that you are trying to copy, I'm guessing that the file is located on the server's local file system. When you use Proc Hadoop, it only connects to the Hadoop Distributed File System (HDFS), so it can only access files that are within HDFS.

So, a couple of suggestions. If you want to use that file, copy it to HDFS from the VM command line with "hadoop fs -put" or "hadoop fs -copyFromLocal" (depending on your version of Hadoop, it may be 'hdfs dfs' instead of 'hadoop fs'). Otherwise, I'd suggest creating a local file on your Windows box and trying a Proc Hadoop copyfromlocal into the directory that you create.

Based on your code comment, you'd want to use copyfromlocal to put a file on HDFS. Copyfromlocal copies a file from your Windows local file system to HDFS on the Cloudera VM, while copytolocal copies a file from HDFS on the Cloudera VM to your Windows local file system.

Below I have revised your Proc Hadoop code and included an example of both types of copy statements. In theory, the code below creates '/user/sas/newdir' on your Cloudera VM's HDFS, then copies a file from your Windows box to the Cloudera VM's HDFS, and finally copies it back to your Windows local file system. You would have to replace the X'ed-out password and make sure that 'D:\hadoop\sample.txt' exists before running the code.

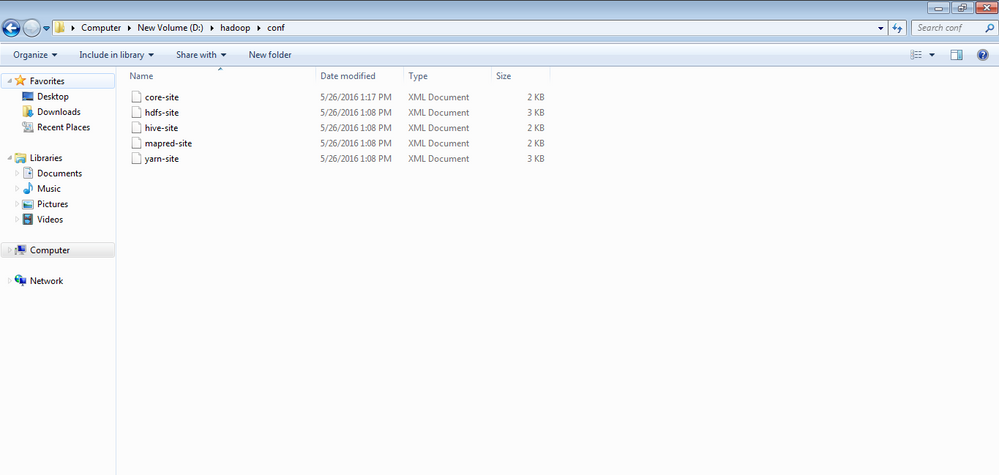

options set=SAS_HADOOP_CONFIG_PATH="D:\hadoop\conf";

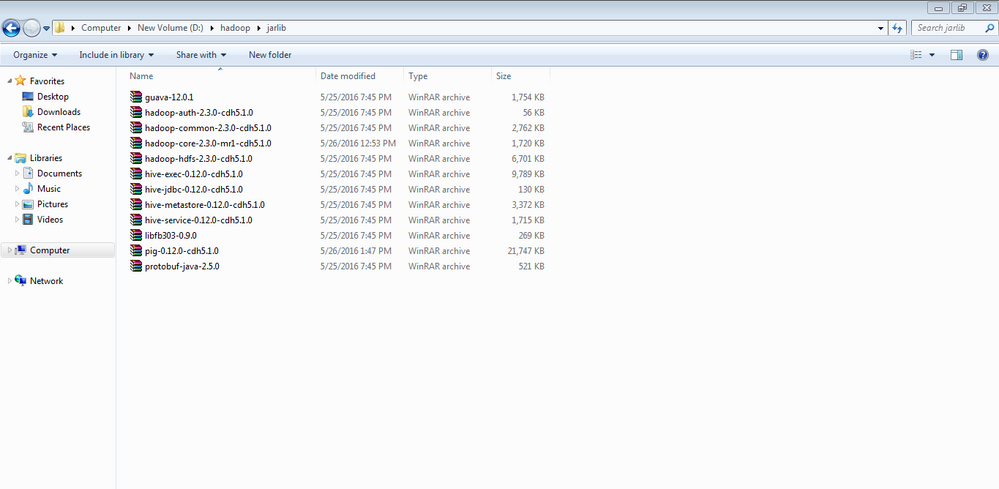

options set=SAS_HADOOP_JAR_PATH="D:\hadoop\jarlib";

proc hadoop username='cloudera' password=XXXXXXXXXX verbose;

hdfs mkdir='/user/sas/newdir';

hdfs copyfromlocal='D:\hadoop\sample.txt' out='/user/sas/samplenew.txt' ;

hdfs copytolocal='/user/sas/samplenew.txt' out='D:\hadoop\sampletest.txt' ;

run;

I don't know much about LIBNAME statements, so I can't address those errors, but I do hope this gets you running with Proc Hadoop.

Hi,

I get the same error no matter which statement I use:

    proc sql;
       connect to hadoop
          (server="myserver" subprotocol=hive2 user=&u_name. pwd=&u_pass.);
       select * from connection to hadoop
          (select var1, count(*) as cnt
             from default.mytable
             group by var1
          );
       disconnect from hadoop;
    quit;

OR

    libname mylib hadoop subprotocol=hive2 port=10000 host="myhost" schema=default user=&u_name. pw=&u_pass.;

    proc freq data=mylib.mytable;
       tables var1;
    run;

When I try to access 'bigger' tables on Hadoop with either of these statements via SAS Studio, I get the same error:

ERROR: Prepare error: Error while processing statement: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask

It seems to have something to do with the size. For example, if I use a DATA step to create a SAS table in WORK from a Hive table, I get the same error. If I then subset the data with the (obs=100000) data set option, it works just fine.

Any update or workaround on this would be really helpful. It would be great to manipulate data in Hive directly from SAS, no matter how big the data is.

Is this fixed? I have a similar situation:

libname hdp hadoop server=dqas1100a port=10000 schema=default subprotocol=hive2;

ERROR: java.util.concurrent.ExecutionException: java.lang.NoClassDefFoundError: sun.util.calendar.ZoneInfoFile (initialization failure)

ERROR: Error trying to establish connection.

ERROR: Error in the LIBNAME statement.

Hi,

For us, the following solution worked.

Add the user and password (USER= and PW=) of an account that has write access to your cluster's /user/<username> folder to your LIBNAME statement. In our case, that was the HUE username and password: each of our HUE users had a directory within /user on the cluster and the rights to write data to it. So your statement should look something like this:

    /*--- your statement WITH PW ---*/
    libname hdp hadoop subprotocol=hive2
       server=dqas1100a
       port=10000
       schema=default
       user=<yourusername>
       pw=<yourpassword>
    ;

    /*--- our statement that works now, for comparison ---*/
    libname myhive hadoop subprotocol=hive2
       port=10000
       host="<our_hostname>"
       schema="default"
       user=<my_HUE_Username>
       pw=<my_HUE_Password>
    ;

Hope that works!

We have Kerberos enabled, so that doesn't fix it. Thanks for sharing your thoughts, though.

ERROR: The Hive Kerberos principal string is present in the hive-site.xml file in the SAS_HADOOP_JAR_PATH. The USER= and PASSWORD= options cannot not be specified when the Hive Kerberos principal string is present.

ERROR: Error in the LIBNAME statement.
