- Home

- /

- Programming

- /

- Programming

- /

- Alternative to SAS Merge

- RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

You've started a really interesting discussion here and I'm spending unreasonable time with it because I'm actually also learning something.

I believe you're now at the point of "it depends".

If you don't want to go for an "in-place" approach using MODIFY then the one thing you want to concentrate on is to keep I/O to a minimum. This means you want to minimize read/write operations and make best use of your actual environment.

There are also a few data set option which you can set when creating a ds which can improve I/O.

How far you need to take things will depend on how big your current performance problem actually is (are some quick-wins sufficient or do you need to squeeze out the last drop?).

Both the UPDATE and MERGE statement fully recreate the master ds and as you have Updates and Inserts I would go for Merge.

If both the Master and Transaction ds are indexed then the BY statement could use the index and the ds wouldn't need to be physically sorted that way. That's what @Ksharp was talking about using UPDATE with an index.

I've done some testing with your volumes and the results don't support such an approach (the merge took around 6 minutes on my machine). So for merging have your data sets physically sorted in the way you refer to them in the By statement when merging (that took me 40 seconds in my environment which is more or less the time required to write the master ds).

Now: If you've got control how the master DS is sorted then just have it sorted ONCE (once off job). A Merge with by group processing maintains the sort order and you don't need to run a sort every single time.

On my laptop using the V9 engine with compression turned performance was as good or even better than most other approaches (the merge took around 40 seconds for a compressed DS, only the MODIFY with an index and uncompressed data was significantly faster with 20 seconds).

On my laptop the main time spent is for "write to disc" - initial creation of the master ds took already around 40 seconds.

Now these timings could be very different in your environment and your records and you will have to run some tests to come up with the right strategy (if required, the quick win will be to no more sort your master DS every single time).

Assuming that your master DS is quite huge even when compressed, I still would go for the SPDE engine to keep file size in a reasonable area. Using the SPDE engine could also be beneficial for data retrieval (eg. multithreading with indexes and where clauses) and I'm sure that also your OPS team prefers to have reasonable file sizes for backup and recovery processes.

If you're happy to have your master ds sorted the way you need it for a Merge with By group processing then using the hash approach as proposed by @Ksharp won't be faster as it still requires a read/write operation. Only consider the hash approach if your master ds is sorted differently AND you can be sure that you can fit the full transaction dataset always into memory.

Below a code version using MERGE which assumes that the Master DS is already sorted as expected by the By statement. This is basically your initial code without the sort. The merge took around 40 seconds in my environment.

I'm expecting your real data to have a much wider PDV and writing the full data could take up much more time. So here using the SPDE engine and/or a MODIFY statement or at least using the "fastest disk available" could make a big difference in end-to-end run-times.

The ds option "sortedby=date id" is not necessary for the merge BUT it can be beneficial to to have this attribute set on a ds for data retrieval as it can allow the compiler to eventually take a better execution path (especially for SQL queries, or where clauses with the SPDE engine).

There had been the suggestion to use the "PRESORTED" option with Proc Sort so that sorting only happens when it is required. For Proc Sort to determine if sorting is required it still would need to scan (read) the source ds. I'm not sure but eventually with attribute "sortedby=date id" set, Proc Sort wouldn't scan the ds anymore. If that's true then you could have in your code a Proc Sort for the Master ds - just make sure that you use option "PRESORTED" and set attibute "sortedby" on the output ds (out= in proc sort; with same ds name than in data=).

options fullstimer;

/* create directories in WORK */

/*data _null_;*/

/* Base_Dir="%sysfunc(pathname(work))";*/

/* rc1=dcreate('data', cats(Base_Dir,'\'));*/

/* rc2=dcreate('index', cats(Base_Dir,'\'));*/

/*run;*/

/* define libname using SPDE engine */

/* partsize of 4GB is for uncompressed. */

/* Try to end up with around 4 partitions per "Data folder" and choose Partsize accordingly */

/*libname spdetest spde ("%sysfunc(pathname(work))")*/

/* datapath=("%sysfunc(pathname(work))\data" "D:\temp\SPDE\data")*/

/* indexpath=("%sysfunc(pathname(work))\index")*/

/* partsize=4G*/

/* ;*/

libname spdetest (work);

/* create master data set */

/*data spdetest.master(compress=yes);*/

data spdetest.master(compress=yes sortedby=date id);

length other_var $10 big_var $1000;

retain other_var 'MASTER' big_var 'big';

do date= '01jul2016'd to '31jul2016'd, '01aug2016'd, '01sep2016'd;

do id=1,2,3,5, 100 to 10**6;

output;

end;

end;

format date date9.;

run;

/* create transaction data set */

data trans;

length other_var $10;

retain other_var 'TRANS';

do id=4,2, 50000 to 2000 by -1;

do date='01sep2016'd, '01oct2016'd;

output;

end;

end;

format date date9.;

run;

proc sort data=trans;

by date id;

run;

/* merge master with trans */

data spdetest.master(compress=yes sortedby=date id);

merge spdetest.master trans ;

by date id;

run;

data retrieval;

set spdetest.master;

where other_var='TRANS';

run;

proc contents data=spdetest.master;

run;quit;

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

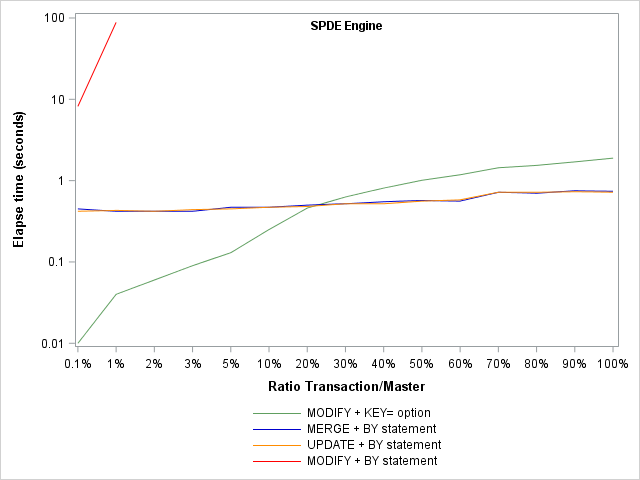

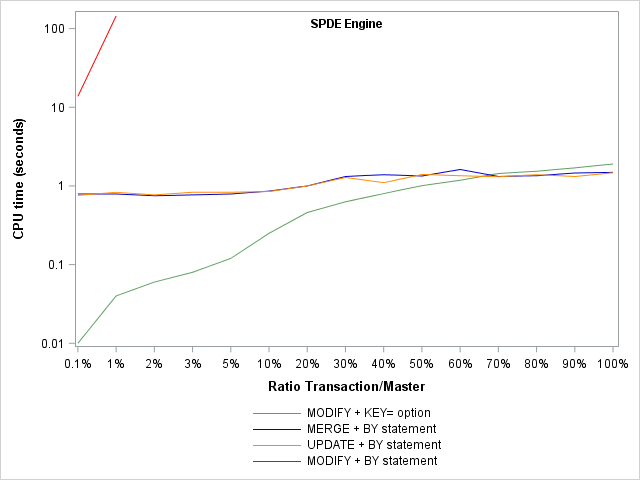

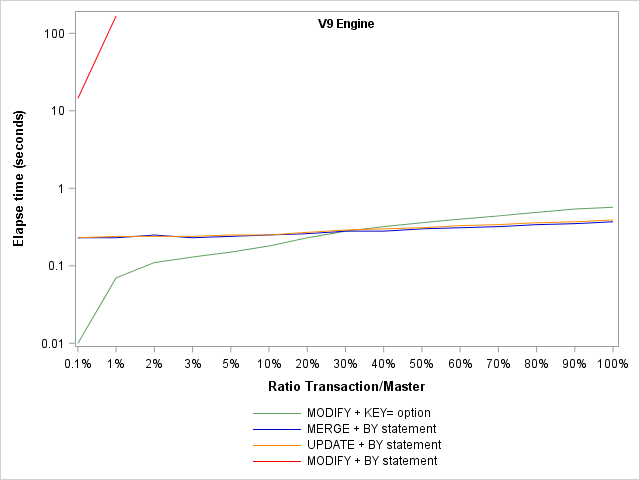

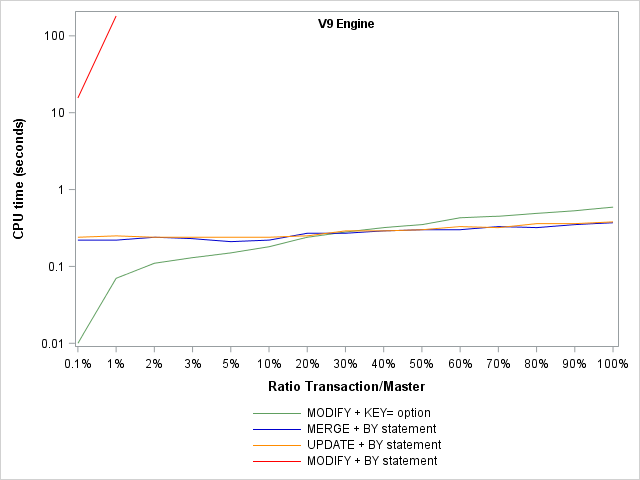

I did a quick benchmark on a small uncompressed 200,000 obs x 1024 bytes table.

I had to keep the table small if I wanted to include the MODIFY BY test results as they are so slow, even using a log scale.

CPU times and Elapse times are roughly equal on my machine as we have fast I/O on solid state storage and very average CPUs.

Conclusions (remember, conclusions are only valid for this data on this machine):

- MODIFY + BY should be avoided

- MODIFY + KEY= is the fastest until we need to update about 1/3 to 1/2 of the table. Beyond this a full table overwrite is faster.

When using SPDE, the pattern is similar.

- MERGE BY is slightly faster than UPDATE BY.

- SPDE is slower

The code is attached.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Chris,

Well done. It looks like MERGE+KEY have some edge only to update <5% for V9 engine.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

@KiranMaddi Modify+key= doesn't require sorting your table, which is a huge bonus if your table is not sorted yet.

If it is sorted (I think it is, right?), you'll lose the sort order by using Modify+key= since insertions will be done at the end of the table.

If you can keep the table sorted, you should, as this can optimise queries made against the table. Sadly the sort order is no longer validated after a data step (we need this! see https://communities.sas.com/t5/SASware-Ballot-Ideas/create-a-VALIDATESORT-data-set-option-to-validat...), but make sure to use the sortedby= option which is better than nothing.

If your table is not sorted, or if you don't/won't keep the sort order, then it seems that Modify+key= is the best option option indeed. But remember that my benchmark was done on different data and different hardware. Your circumstances are different and the best solution may be different for you. I gave this as an (hopefully relevant) example rather than a hard guideline.

Rewriting 160GB on my server's disks is not an issue, but it may be an issue on your machine. In this case, inserting at the end is the only option, and and index will be used to access data in a sorted manner if that's needed. If using an index to access sorted data is too slow and is also an issue, then you have to choose which issue is the most critical.

Hoping this helps...

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Many thanks for spending you valuable time on this. I have been away from work so, got a chance to look at this today. I have tested your code with my data volumes(History: 60million rows,855 variablesTrans: 40-60k rows,855 variables. I can say that using SPDE has saved me around 3-4 hours. I understand my data is huge but I am still not happy with the run times even after this savings. Do you think using Modify is the answer as it won't recreate the whole dataset?

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

@KiranMaddi wrote:

Hi Patrick

Many thanks for spending you valuable time on this. I have been away from work so, got a chance to look at this today. I have tested your code with my data volumes(History: 60million rows,855 variablesTrans: 40-60k rows,855 variables. I can say that using SPDE has saved me around 3-4 hours. I understand my data is huge but I am still not happy with the run times even after this savings. Do you think using Modify is the answer as it won't recreate the whole dataset?

From your numbers, I place your raw dataset size in at least the 400 GB range. So you are at a point where you have to consider the throughput of your storage as an important measure. Simply reading your data sequentially from one reasonably fast spinning-metal disk will take at least an hour.

So the question arises (if it hasn't been answered yet): how does your storage look like?

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

The Master dataset is currently on 165GB. For now we seem to be OK with the storage(Almost 500GB left on this disk) We are also considering expanding the storage in the near future.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

The size of disks ist not the problem nowadays, when 2TB disks come for < 100$ on amazon.

The main criteria are data rate and access times.

Using 4 SSDs in a RAID 10 stripe/mirror set will get you throughput, extremely short access times, and reliability.

When monitoring a SAS server, you will usually find that a lot of the time is spent in I/O waits, so fast storage is the best thing to invest in.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Faster (than sata/sas) solid state storage is now very affordable.

Work storage should always use it, as well any storage requiring the highest speeds.

For example

https://www.amazon.com/gp/aw/d/B00UHJJQ3Q/ref=mp_s_a_1_5

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

@ChrisNZ wrote:

Faster (than sata/sas) solid state storage is now very affordable.

Work storage should always use it, as well any storage requiring the highest speeds.

For example

https://www.amazon.com/gp/aw/d/B00UHJJQ3Q/ref=mp_s_a_1_5

Heh. With speeds like that, I'd just use 2 for mirroring, so there's no SPOF.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

A similar but slower iofusion card cost us $30k 5 years ago.

It was a hard battle to convince the bean counters.

Now there is no reason for anyone not to use these.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Actually UPDATE can replace non-missing value with missing value.

data a;

input x y;

cards;

1 2

2 4

;

run;

data b;

input x y;

cards;

2 .

3 5

;

run;

data want;

update a b updatemode=nomissingcheck;

by x;

run;- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

@Patrick I like this!

It is worth remembering how

modify MASTER key=KEY;

is so fast while

modify MASTER TRANS; by KEY;

is uselessly slow.

modify MASTER key=KEY;

typically becomes slower than a full rewrite

merge MASTER TRANS; by KEY;

when the percentage of records to update reaches 20-30%.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Don't miss out on SAS Innovate - Register now for the FREE Livestream!

Can't make it to Vegas? No problem! Watch our general sessions LIVE or on-demand starting April 17th. Hear from SAS execs, best-selling author Adam Grant, Hot Ones host Sean Evans, top tech journalist Kara Swisher, AI expert Cassie Kozyrkov, and the mind-blowing dance crew iLuminate! Plus, get access to over 20 breakout sessions.

Learn how use the CAT functions in SAS to join values from multiple variables into a single value.

Find more tutorials on the SAS Users YouTube channel.

Click image to register for webinar

Click image to register for webinar

Classroom Training Available!

Select SAS Training centers are offering in-person courses. View upcoming courses for: