Running SAS Viya in Upstream Open source Kubernetes – part 3

In this post, we'll continue our journey toward a SAS Viya deployment in an "on-premise" open source Kubernetes cluster.

The first post discussed the scope of support and the platform requirements (calico, containerd, kube-vip, etc.), and introduced the SAS Viya 4 Infrastructure as Code (IaC) for Open Source Kubernetes project.

The second one focused on the IaC tool, its specific requirements and how to configure and run it.

But if your customer has chosen to deploy Viya on-premise on an open source Kubernetes and you are using the SAS Viya 4 Infrastructure as Code (IaC) for Open Source Kubernetes tool to prepare the cluster, you will very likely need to look under the hood and understand how the tool works, so that you are in a position to troubleshoot any issue efficiently.

So, in this 3rd post, we’ll give you some tips to troubleshoot the IaC tool for Opensource Kubernetes and also share some experience and challenges we faced while using the tool.

Troubleshoot the IaC tool for Open source Kubernetes

Once you have your Ansible inventory and vars file ready (as discussed in the previous post), all you have to do is run the oss-k8s.sh script, which wraps the execution of the Ansible playbooks. This page in the viya4-iac-k8s project repository explains how the script can be used.

As explained in the first post, you can call the oss-k8s.sh in two ways: one to prepare the system ("oss-k8s.sh setup"), and one to install the Kubernetes software ("oss-k8s.sh install").

If the "setup" command fails for some reason, you can fix the root cause and simply run the same command again (the underlying playbook is what we call "fully idempotent").

However, for the "install" command there is an "uninstall" command that you need to run when you want to make a second attempt at running the Kubernetes installation. The "uninstall" command takes care of the required clean-up that needs to take place before a new try.
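The retry sequence for the "install" step can be sketched as a small shell helper. This is only a sketch: rerun_install and the IAC_SCRIPT variable are hypothetical names introduced here, and it assumes the script accepts the "install" and "uninstall" arguments exactly as described above. It is meant to be run only after you have fixed the root cause of the first failure.

```shell
# Hypothetical helper: clean up a failed "install" attempt, then try again.
# IAC_SCRIPT is an assumed variable; point it at your copy of oss-k8s.sh.
IAC_SCRIPT="${IAC_SCRIPT:-./oss-k8s.sh}"

rerun_install() {
    # The "uninstall" command performs the clean-up required before a new try.
    "$IAC_SCRIPT" uninstall || {
        echo "uninstall failed, not retrying install" >&2
        return 1
    }
    "$IAC_SCRIPT" install
}

# Usage (after fixing the root cause of the failure):
#   rerun_install
```

Stopping when the clean-up itself fails avoids starting a new installation on top of a half-removed one.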

Troubleshoot with Ansible

The "oss-k8s.sh setup" and "oss-k8s.sh install" commands correspond to two distinct Ansible playbooks: playbooks/systems-install.yaml and playbooks/kubernetes-install.yaml.

In case of failure, you might want to bypass the oss-k8s.sh script and run the playbook yourself. The only catch is that you need to set the required variables.

Here is an example of how to do that:

ANSIBLE_USER=cloud-user

ANSIBLE_PASSWORD=lnxsas

BASEDIR="/home/cloud-user/viya4-iac-k8s"

SYSTEM="bare_metal"

ANSIBLE_INVENTORY="inventory"

ANSIBLE_VARS="ansible-vars.yaml"

IAC_TOOLING="terraform" # default value, as opposed to "docker" (Terraform itself is not used for bare metal)

# install pre-requisites

ansible-galaxy collection install -r "$BASEDIR/requirements.yaml" -f

cd $BASEDIR

# "oss-k8s.sh setup" step by step

ansible-playbook -i $ANSIBLE_INVENTORY --extra-vars "deployment_type=$SYSTEM" --extra-vars @$ANSIBLE_VARS playbooks/systems-install.yaml --flush-cache --tags install --step

# "oss-k8s.sh install" from a given task

ansible-playbook -i $ANSIBLE_INVENTORY --extra-vars "deployment_type=$SYSTEM" --extra-vars "iac_tooling=$IAC_TOOLING" --extra-vars "iac_inventory_dir=$BASEDIR" --extra-vars "k8s_tool_base=$BASEDIR" --extra-vars @$ANSIBLE_VARS $BASEDIR/playbooks/kubernetes-install.yaml --flush-cache --tags install --start-at-task "Register Git HASH information"

Once you know how to run the tool from the ansible command line, you can use all your favorite ansible "troubleshooting tricks", such as increasing the log verbosity (-vvvv), running the tasks one by one (with "--step"), or recovering from a failure by restarting from a specific checkpoint ("--start-at-task").
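To avoid retyping the long option list for every troubleshooting run, the shared options can be wrapped in a small function. This is a minimal sketch: iac_playbook and ANSIBLE_PLAYBOOK_BIN are hypothetical names introduced for illustration, the variables are the ones set in the example above, and it assumes that passing the extra variables to the systems playbook as well is harmless.

```shell
# Hypothetical wrapper around the ansible-playbook calls shown above.
# Assumes BASEDIR, SYSTEM, IAC_TOOLING, ANSIBLE_INVENTORY and ANSIBLE_VARS
# are already set as in the earlier example.
ANSIBLE_PLAYBOOK_BIN="${ANSIBLE_PLAYBOOK_BIN:-ansible-playbook}"

iac_playbook() {
    playbook="$1"
    shift
    # Any remaining arguments ("$@") are passed through unchanged.
    "$ANSIBLE_PLAYBOOK_BIN" -i "$ANSIBLE_INVENTORY" \
        --extra-vars "deployment_type=$SYSTEM" \
        --extra-vars "iac_tooling=$IAC_TOOLING" \
        --extra-vars "iac_inventory_dir=$BASEDIR" \
        --extra-vars "k8s_tool_base=$BASEDIR" \
        --extra-vars "@$ANSIBLE_VARS" \
        "$BASEDIR/playbooks/$playbook" \
        --flush-cache --tags install "$@"
}

# Usage examples:
#   iac_playbook systems-install.yaml --step
#   iac_playbook kubernetes-install.yaml -vvvv
#   iac_playbook kubernetes-install.yaml --list-tasks
```

The --list-tasks option prints the task names without running them, which helps to find the exact name to give to --start-at-task.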

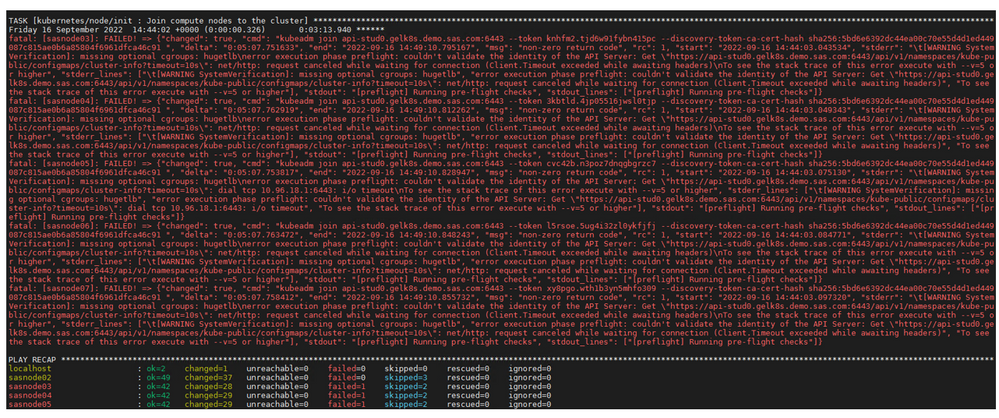

Finally, if just like me, you are struggling to read ansible error message when displayed during a playbook failure, like in the screenshot below:

Select any image to see a larger version.

Mobile users: To view the images, select the "Full" version at the bottom of the page.

then you can use the trick that I’m sharing here (that is not something new but remains pretty useful when it comes to ansible troubleshooting).

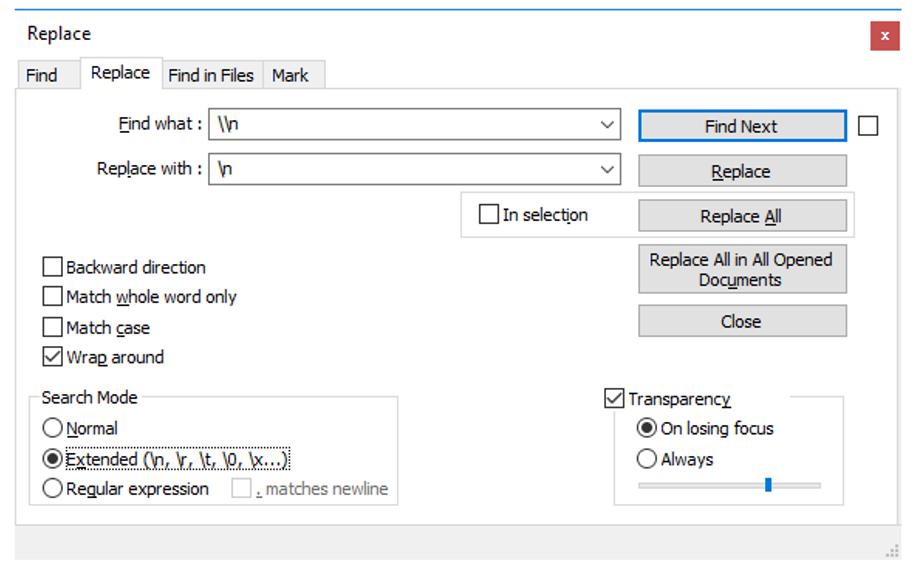

If you have Notepad++, just copy/paste the ansible output and perform a global replacement as shown below (don’t forget to click on "Extended").

And it should you the error message in a much more readable form. 😊
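If you are working in a Linux terminal without Notepad++, a similar clean-up can be sketched with awk. This assumes that the replacement shown above turns the literal \n and \t escape sequences of the ansible output into real line breaks and tabs; unescape_ansible_output is a hypothetical helper name.

```shell
# Hypothetical helper: make escaped ansible output readable.
# Reads text on stdin and turns literal "\r\n", "\n" and "\t" sequences
# into real line breaks and tabs.
unescape_ansible_output() {
    awk '{
        gsub(/\\r\\n/, "\n")
        gsub(/\\n/, "\n")
        gsub(/\\t/, "\t")
        print
    }'
}

# Usage: save the failing task output to a file, then run for example
#   unescape_ansible_output < ansible-error.txt
```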

Troubleshoot a "kubeadm init" failure

The bulk of the work performed by the IaC tool is the installation of the open-source Kubernetes software itself on our nodes. Kubeadm (which is one of the official tools referenced in the opensource K8s project) is used for that purpose and it happens in the "kubeadm init" task.

If the task fails, you will probably get an error message that tells you to look at the kubelet log or at the container logs.

The kubelet is an agent that runs on each node of a Kubernetes cluster to perform various tasks (register the node, monitor the pods resource utilization, etc…).

So, you’ll have to log on to your control plane machine (there can be one or three) and inspect the kubelet service.

There are a few ways to see the kubelet service logs:

sudo systemctl status kubelet -l

sudo journalctl -xeu kubelet | less
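The kubelet log can be very verbose, so it may help to keep only the error lines. The sketch below assumes that the kubelet writes its messages in the usual klog text format, where each message starts with a severity letter followed by the month and day (for example "E0512"); kubelet_errors is a hypothetical helper name and the filter will not match if the kubelet is configured with another log format.

```shell
# Hypothetical helper: keep only klog error (E) and fatal (F) lines.
# Reads log lines on stdin; assumes the klog text format described above.
kubelet_errors() {
    grep -E '(^|[^[:alnum:]])[EF][0-9]{4} [0-9]{2}:[0-9]{2}:[0-9]{2}'
}

# Usage on a control plane node:
#   sudo journalctl -u kubelet --no-pager --since "15 minutes ago" | kubelet_errors
```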

You might also need to look at the container logs, which are stored in /var/log/pods/<pod ID>. For example, the API Server log can be found in /var/log/pods/<api_server pod ID>/kube-apiserver
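Locating the right file under /var/log/pods by hand can be tedious. Here is a minimal sketch that prints the most recently modified API server log, assuming that the path of the log file contains the string "kube-apiserver" and that the file name ends with ".log"; latest_apiserver_log is a hypothetical helper name.

```shell
# Hypothetical helper: print the most recently modified kube-apiserver
# container log under the pod log directory (default: /var/log/pods).
latest_apiserver_log() {
    log_root="${1:-/var/log/pods}"
    # ls -t sorts by modification time, newest first.
    find "$log_root" -type f -path '*kube-apiserver*' -name '*.log' \
        -exec ls -t {} + 2>/dev/null | head -n 1
}

# Usage from a root shell on a control plane node:
#   tail -n 50 "$(latest_apiserver_log)"
```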

If the Kubernetes API server has started (in spite of the task failure and many error messages in the kubelet logs) you could also try to connect to your cluster.

The KUBECONFIG file will not have been generated yet, but you can find one on one of the control plane nodes (/etc/kubernetes/admin.conf) and use it to access the cluster (for example with Lens or the kubectl command) and assess the issue.
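As a minimal sketch of the kubectl option, the helper below points kubectl at the admin kubeconfig for every call. It assumes that kubectl is available on the control plane node and that you run it from a shell that can read the file; kadmin, KUBECTL_BIN and ADMIN_KUBECONFIG are hypothetical names introduced for this example.

```shell
# Hypothetical helper: run kubectl with the admin kubeconfig found on the
# control plane node. KUBECTL_BIN and ADMIN_KUBECONFIG are assumed variables.
KUBECTL_BIN="${KUBECTL_BIN:-kubectl}"
ADMIN_KUBECONFIG="${ADMIN_KUBECONFIG:-/etc/kubernetes/admin.conf}"

kadmin() {
    "$KUBECTL_BIN" --kubeconfig "$ADMIN_KUBECONFIG" "$@"
}

# Usage from a root shell on a control plane node:
#   kadmin get nodes -o wide
#   kadmin get pods -n kube-system
```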

If you need to run some commands to inspect the running containers or associated images, you can use the crictl tool (which is installed by the IaC and offers a long list of commands to interact with the containers).

sudo crictl ps

The network configuration needs to be defined exactly as expected for the "kubeadm init" task to be successful.

So, you might want to make sure that the floating IP that was defined during the IaC configuration (kubernetes_vip_ip) has been attached to your network interface, and also that the DNS name that you have associated with it (kubernetes_vip_loadbalanced_dns) resolves correctly.

# jump on one of the control plane nodes

ssh <control plane node>

# show the IP addresses attached to the network interface

sudo ip addr show eth0

# ensure the DNS name (kubernetes_vip_loadbalanced_dns)

# resolves to the floating IP (kubernetes_vip_ip)

nslookup osk-api.viya.mycompany.com
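The same checks can be scripted rather than read by eye. The sketch below only covers the "is the floating IP attached" part: has_ip is a hypothetical helper that scans the one-line-per-address output of "ip -o -4 addr show", and 10.0.0.100 is a made-up address standing in for your kubernetes_vip_ip value.

```shell
# Hypothetical helper: succeed if the given IPv4 address appears in the
# output of "ip -o -4 addr show" read from stdin.
has_ip() {
    awk -v ip="$1" '
        { split($4, cidr, "/"); if (cidr[1] == ip) found = 1 }
        END { exit !found }
    '
}

# Usage on a control plane node (replace 10.0.0.100 with kubernetes_vip_ip):
#   ip -o -4 addr show | has_ip 10.0.0.100 && echo "VIP is attached"
#   getent hosts osk-api.viya.mycompany.com   # should print the same address
```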

First experience feedback

While working on a new hands-on for the Viya 4 Deployment workshop VLE (available soon 😊), which gives SAS employees the opportunity to try out this new IaC tool (and set up their own open source Kubernetes cluster on a bunch of RACE machines), I faced several challenges and learned a few things. I’ll share some of them here 😊

Topology traps

As we’ve seen, if you are OK with deploying a less available and less scalable K8s cluster, you can significantly reduce the number of required machines. Some "non-kubernetes" roles such as "jumphost" or "NFS" can also be placed on hosts that play the role of Kubernetes nodes; they don’t need to be on dedicated machines.

However, be careful, there might be some incompatible combinations!

As an example, you can’t place jumphost and NFS roles on the same node (it would trigger problems with the NFS share mount that is configured on the jumphost).

The reboot issue

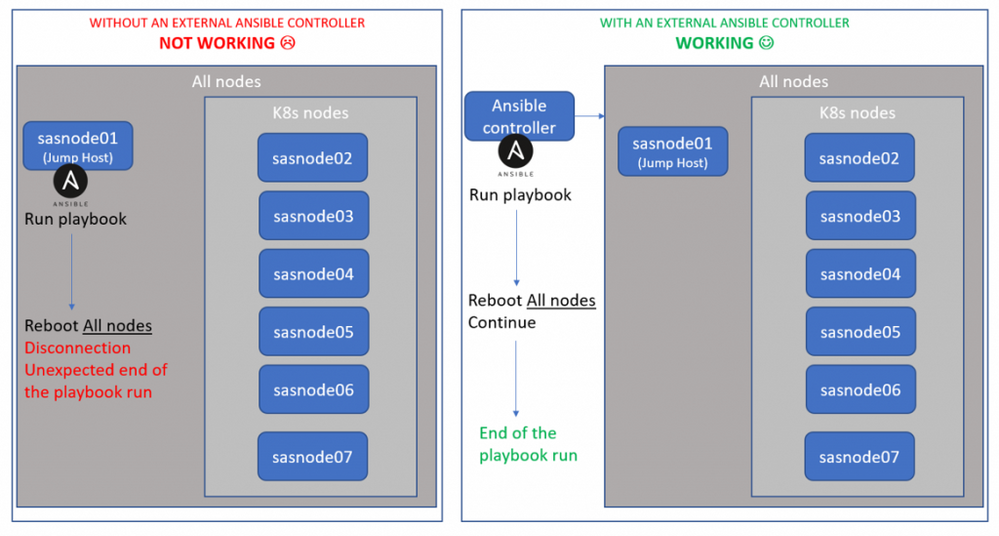

During the playbook run, there is an ansible task to reboot all the nodes defined in the inventory, in order to force the OS to pick up some low-level system changes (like the GRUB configuration change).

But if you are using the "jumphost" provisioned machine as your ansible controller, then you get automatically disconnected in the middle of the playbook execution! (Ansible forces the machine from which you run the playbook to reboot.) And obviously, the rest of the playbook tasks cannot be executed.

One way to avoid this trap is to run the ansible playbook from an external machine, one that is not among the hosts defined in the Ansible inventory and, as such, is not affected by the "reboot all nodes" task.

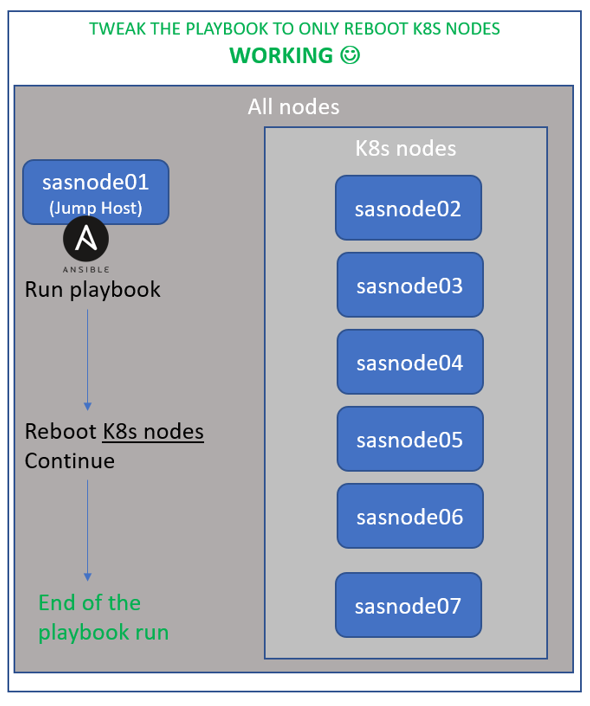

The two scenarios are represented in the schema below.

However, it means that we need yet another machine in addition to the ones we have defined in the inventory...

There is a trick to avoid this additional machine requirement in this kind of topology, though.

The reboot is only really required on the Kubernetes nodes, so that they can pick up the cgroup directives defined in the GRUB configuration. A reboot is not required for the jump host machine.

So, to avoid the need for another external ansible controller, we can just slightly modify the playbook to make the "reboot" task only happen on the Kubernetes nodes 😊 (instead of all the nodes).

Here’s an example of how to automatically modify the original playbook for that purpose:

# Override the kubernetes-install.yaml playbook to only reboot K8s nodes

sed -i '/- hosts: all/c\- hosts: k8s' ~/viya4-iac-k8s/playbooks/kubernetes-install.yaml
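Since the command above edits the playbook in place, you may prefer to preview the affected lines and keep a backup first. Here is a minimal sketch, assuming GNU sed (as found on most Linux hosts); limit_reboot_to_k8s is a hypothetical helper name.

```shell
# Hypothetical helper: show which lines the substitution will touch,
# then apply it while keeping a ".bak" copy of the original playbook.
limit_reboot_to_k8s() {
    playbook="$1"
    echo "Lines that will be changed:"
    grep -n -- '- hosts: all' "$playbook" || {
        echo "no '- hosts: all' line found, nothing to do" >&2
        return 1
    }
    # Same expression as above; -i.bak keeps the original next to it.
    sed -i.bak '/- hosts: all/c\- hosts: k8s' "$playbook"
}

# Usage:
#   limit_reboot_to_k8s ~/viya4-iac-k8s/playbooks/kubernetes-install.yaml
#   diff ~/viya4-iac-k8s/playbooks/kubernetes-install.yaml.bak \
#        ~/viya4-iac-k8s/playbooks/kubernetes-install.yaml
```

Note that the expression replaces every line containing "- hosts: all", so the preview is a quick way to confirm that only the intended play is affected.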

This 3rd scenario is represented below:

Note that this issue was reported here

Kubeadm requirements

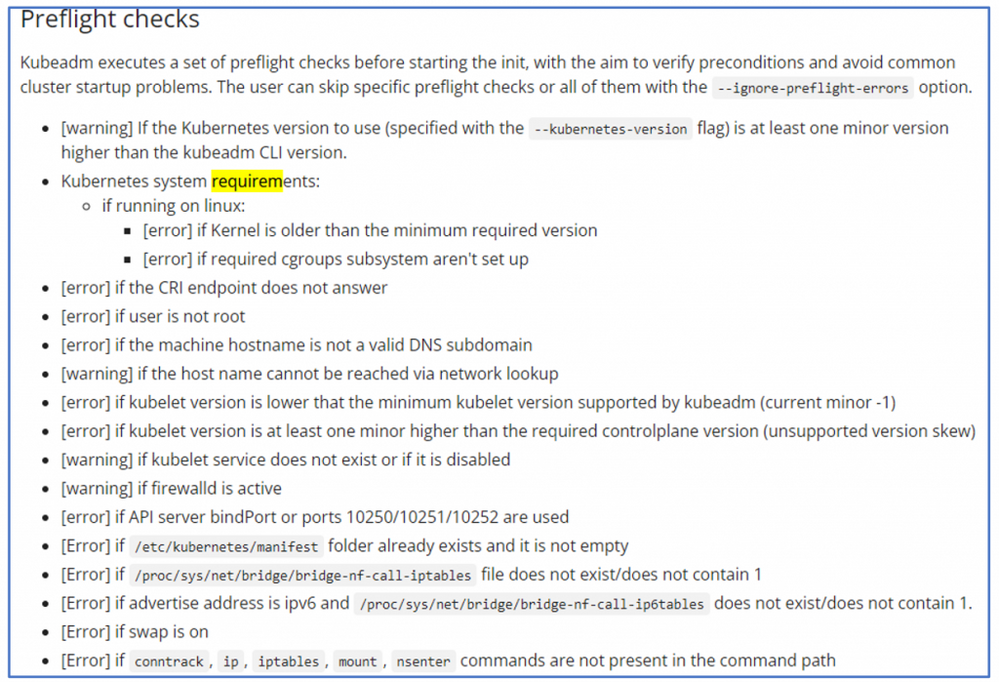

The IaC tool relies on Kubeadm to install Kubernetes.

Kubeadm is defined in the official Kubernetes documentation as "a tool built to provide (…) fast paths for creating Kubernetes clusters".

Kubeadm also has its own requirements: it performs a series of “pre-flight” checks and fails if any of the requirements is not fulfilled.

The Kubeadm requirements are not always explicitly repeated in the IaC documentation. As an example, swap is expected to be disabled on the Linux hosts. If that is not the case, then the IaC tool fails (this issue has been reported here).

If you want an automated way to disable the swap on your Kubernetes nodes before running the IaC tool, you can use the snippet below (double check your indentation if you copy the code from here).

bash -c "cat << EOF > ~/viya4-iac-k8s/disable-swap.yaml
---
- hosts: k8s
  tasks:
    - name: Disable SWAP since kubernetes can't work with swap enabled (1/2)
      shell: |
        swapoff -a
    - name: Disable SWAP in fstab since kubernetes can't work with swap enabled (2/2)
      replace:
        path: /etc/fstab
        regexp: '^([^#].*?\sswap\s+sw\s+.*)$'
        replace: '# \1'
EOF"

cd ~/viya4-iac-k8s

ansible-playbook -i inventory disable-swap.yaml -b
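To confirm that swap is really off before running the IaC tool, you can check /proc/swaps, which contains one header line followed by one line per active swap area. This is a minimal sketch; swap_is_active is a hypothetical helper name.

```shell
# Hypothetical helper: succeed if at least one swap area is active.
# Reads /proc/swaps by default; another file can be given for testing.
swap_is_active() {
    swaps_file="${1:-/proc/swaps}"
    [ "$(tail -n +2 "$swaps_file" | wc -l)" -gt 0 ]
}

# Usage on a node:
#   if swap_is_active; then echo "swap is still enabled"; else echo "swap is off"; fi
#
# To check all the Kubernetes nodes at once from the ansible controller
# (no output for a node means no active swap):
#   ansible -i inventory k8s -b -m command -a "swapon --show"
```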

If you face an issue with the IaC tool, you should do the following:

- Try to troubleshoot the issue by yourself

- Check in the repository issue list to see if it is a known issue

- If you are still stuck, create a new GitHub issue in the IaC repository

Conclusion

As you may already have realized (especially if you’ve read the whole post series), deploying your own Kubernetes cluster (even with the IaC tool) might not be so easy and seamless.

Given the complexity of the infrastructure requirements (especially in terms of network and storage), the inherent sophistication of Kubernetes itself, and the variety of infrastructure environments, it is not really such a big surprise…

That is mainly why we strongly encourage organizations that choose the "on-prem" open source Kubernetes platform for Viya to ensure that they have Kubernetes and container technology knowledge and skills "in house".

Having good experience and knowledge of the Linux system and Ansible will also be of great help in provisioning the cluster with the IaC tool, and hopefully this post also provides some useful tips for a successful implementation.

In our next and last post of the series, we’ll finally talk about the SAS Viya software deployment itself with a zoom on the SAS Viya 4 Deployment GitHub project (also known as “DaC” for Deployment as Code).

Thanks for reading and for your comments!

Find more articles from SAS Global Enablement and Learning here.
