
A sidecar logging proxy solution for the SAS Container Runtime (SCR)


SAS Container Runtime (SCR) provides a way to publish your analytical models to any environment that can run OCI-compliant containers (such as Docker containers). For example, you could deploy a model to a managed cloud service such as AWS Elastic Container Service (ECS) or Azure Container Apps, or to any Kubernetes cluster running either in the cloud or on-premises. The SCR images generated by SAS Model Manager are free from external dependencies (apart from requiring a container runtime environment, of course) and can be accessed via standardized REST API calls.

While there is a lot of documentation describing the process of building and deploying an SCR model container (see here and here for blogs discussing a ModelOps approach), there is not much material discussing what happens after you’ve successfully deployed your model. Deployment is, after all, just one step in your model’s lifecycle and not the end of it.

In this blog I’d like to focus on how to enable auditing and model governance specifically for models deployed as SCR images. The main challenge is that both tasks require a way to trace your model’s actions and decisions. Let’s see how this can be achieved …

A little bit of background

It has probably become common knowledge in the analytics space that deploying a model to a production environment is not the final stage in the model’s lifecycle. Rather, it’s crucial to keep track of the model’s performance over time to detect when the accuracy of its predictions has degraded below a threshold at which you want to retrain or even retire the model. Besides monitoring model drift, you might have other reasons to track your model as well. For example, you might be required to store a record of all decisions the model has taken for auditing purposes. Note that in both cases you probably need the full record, i.e. the metadata (who called the model, at what time, etc.) and the data (which input data was sent and which probability score was returned).

If you’re in the lucky situation of having full control over the scoring client that communicates with the model container, you could simply log all scoring requests and responses on the client side. But what if this is not the case and you need to do the logging on the server side? As long as you’re within the SAS infrastructure, this isn’t a challenging task: it’s a built-in feature of the publishing destinations for CAS and MAS (note that you have to perform a custom configuration step to enable this feature for MAS).

However, the picture changes once you leave the SAS-managed infrastructure: an SCR model container is fully autonomous and can be deployed to environments without any other SAS platform component within reach. Even “worse” (so to speak), the model container is stateless, which means that it doesn’t write any information to, for example, a persistent volume.

As a DevOps person, you certainly could follow your primal instincts and crack the container image open to add your own layers to it, but that’s not exactly a recommended approach (to put it nicely). Luckily there are better solutions available. Let’s discuss a simple approach first, followed by a more sophisticated one.

A built-in solution: SAS_SCR_LOG_LEVEL_SCR_IO

Quite a mouthful, SAS_SCR_LOG_LEVEL_SCR_IO is one of the SCR core loggers. Out of the box it’s more or less muted, but it can be enabled by setting the appropriate log level. When set to TRACE, it makes the model container log the input and output values of every request it receives.

On Kubernetes, this logger is activated by setting an environment variable on the model container in the Pod template of the Deployment manifest, for example like this (abbreviated for clarity):

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-scr-scoring-pod
spec:
  template:
    spec:
      containers:
        - name: my-scr-scoring-model
          env:
            - name: SAS_SCR_LOG_LEVEL_SCR_IO
              value: TRACE
```

Once activated, your container will start printing out lines like this one to the standard Kubernetes log (abbreviated and formatted for clarity):

```
2023-11-06 17:21:06.724 DEBUG --- [nio-8080-exec-4]
SCR_IO stud01_steel score : Executed module step my_model->score: {
  "version" : 1,
  "inputs" : [
    { "name" : "X_Minimum", "value" : 1498 },
    { "name" : "X_Maximum", "value" : 1150 },
    (...)
  ]
} -> {
  "metadata" : { "module_id" : "my_model", ... },
  "version" : 1,
  "outputs" : [
    { "name" : "EM_EVENTPROBABILITY", "value" : 0.15030613001686055 },
    { "name" : "EM_CLASSIFICATION", "value" : " 0" },
    (...)
  ]
}
```

As you can see, you’ll get one log entry for each scoring request the container has received, including a timestamp, the input data and the generated response (for example, the probability). While this is basically all you need, the approach comes with potential disadvantages that might prevent you from using it. One important gotcha is that you might disclose sensitive information to a log that is not under your control: the information is collected and processed by a cluster-wide logging subsystem and might surface in places where you don’t want it to appear (say, a web app like Kibana/OpenSearch). Additionally, you still need to find a way to send that information back to SAS, as you need to ingest it to track your model’s performance.
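If you do go with the built-in logger, the records still have to be pulled out of the cluster log before SAS can ingest them. The following is a minimal Python sketch of that extraction step, assuming each SCR_IO entry arrives on a single line in the shape shown above (the example was reformatted for readability): the first `{` after the text `Executed module step` opens the request payload, and the next `{` after it opens the response. The function name `parse_scr_io_line` is hypothetical.

```python
import json

_DECODER = json.JSONDecoder()


def parse_scr_io_line(line):
    """Extract timestamp, request and response from one SCR_IO log line.

    Assumption: the whole entry is on one line, shaped like the example above:
    '<date> <time> <LEVEL> ... Executed module step <module>->score: {...} -> {...}'
    Returns None for lines that are not SCR_IO scoring entries.
    """
    marker = line.find("Executed module step")
    if marker == -1:
        return None
    # The first '{' after the marker opens the request payload.
    req_start = line.index("{", marker)
    request, req_len = _DECODER.raw_decode(line[req_start:])
    # The next '{' after the request (past the ' -> ' separator) opens the response.
    resp_start = line.index("{", req_start + req_len)
    response, _ = _DECODER.raw_decode(line[resp_start:])
    # The first two whitespace-separated fields are the date and the time.
    timestamp = " ".join(line.split()[:2])
    return {"timestamp": timestamp, "request": request, "response": response}
```

Using `raw_decode` instead of splitting on ` -> ` avoids breaking on values that happen to contain that separator. Note that this does nothing about the exposure problem described above: by the time you parse the line, the data has already passed through the cluster-wide log.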

Introducing the sidecar container pattern

Let’s take a look at the second approach. On Kubernetes, our models are deployed as Pods, which are wrapper objects for one or more containers. By default, the model runtime is the only container in the model Pod, but that’s not carved in stone. We can add a sidecar container running a proxy web server that “intercepts” the traffic directed to the model container. This allows us to capture the inbound and outbound data as it passes by and write it to a log on a shared volume. In other words, we bypass the standard Kubernetes logging facility, but only for the information we’re interested in.

The use of “sidecar” containers is a common deployment pattern that has been around since the early days of Kubernetes. The main purpose of a sidecar is to add supporting features to the main container in a Pod without modifying it. By using sidecars, you avoid having to rebuild your main container when it lacks a feature you need.

The sidecar container(s) and the main container share the Pod’s network namespace and can mount the same Pod volumes, which means that a sidecar can use “localhost” (127.0.0.1) to communicate with the main container. Thanks to this close relationship, sidecar containers are often used as proxies of some kind, and this is exactly why we’re using one in this blog.
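To make the interception idea concrete independently of any particular web server, here is a minimal Python sketch of a logging reverse proxy built only on the standard library. It illustrates the pattern; it is not the solution used in this blog (that is nginx with Lua, discussed below). The ports 8081 and 8080 match the manifests in this blog; the class name `LoggingProxy`, the log file name and the record layout (modeled on the sidecar’s JSON log format) are assumptions, and only POST requests are handled.

```python
import json
import threading
import urllib.error
import urllib.request
import uuid
from datetime import datetime, timezone
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# The upstream is always localhost, so ignore any HTTP(S)_PROXY environment settings.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def _as_json(raw):
    """Parse a body as JSON; fall back to an empty object on bad input."""
    try:
        return json.loads(raw)
    except ValueError:
        return {}


class LoggingProxy(BaseHTTPRequestHandler):
    upstream = "http://127.0.0.1:8080"  # main model container, same Pod network
    log_file = "score-log.json"         # hypothetical file on the shared volume
    _lock = threading.Lock()            # serialize appends from concurrent requests

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        req_body = self.rfile.read(length)
        upstream_req = urllib.request.Request(
            self.upstream + self.path,
            data=req_body,
            headers={"Content-Type": self.headers.get("Content-Type", "application/json")},
            method="POST",
        )
        try:
            with _OPENER.open(upstream_req) as resp:
                status, resp_body = resp.status, resp.read()
                content_type = resp.headers.get("Content-Type", "application/json")
        except urllib.error.HTTPError as err:
            # Non-2xx answers from the model are passed through unchanged.
            status, resp_body = err.code, err.read()
            content_type = err.headers.get("Content-Type", "text/plain")
        except urllib.error.URLError:
            status, resp_body, content_type = 502, b"upstream unavailable", "text/plain"

        # Write the audit record before replying, so every answer the client
        # receives has a matching record.
        self.write_record(req_body, resp_body)

        self.send_response(status)
        self.send_header("Content-Type", content_type)
        self.send_header("Content-Length", str(len(resp_body)))
        self.end_headers()
        self.wfile.write(resp_body)

    def write_record(self, req_body, resp_body):
        record = {
            "timestamp": datetime.now(timezone.utc).strftime("%d/%b/%Y:%H:%M:%S %z"),
            "req_id": uuid.uuid4().hex,  # 32 hex characters, like nginx's $request_id
            "request": _as_json(req_body),
            "response": _as_json(resp_body),
        }
        with self._lock, open(self.log_file, "a", encoding="utf-8") as fh:
            fh.write(json.dumps(record) + ",\n")

    def log_message(self, fmt, *args):
        pass  # silence the default per-request stderr logging


def serve(port=8081):
    """Run the proxy on the sidecar port (blocks forever)."""
    ThreadingHTTPServer(("0.0.0.0", port), LoggingProxy).serve_forever()
```

Writing the record before replying trades a little latency for the guarantee that no answer leaves the Pod unlogged; nginx, by contrast, writes its access log after the response has been handled.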

A custom solution: the web proxy server sidecar

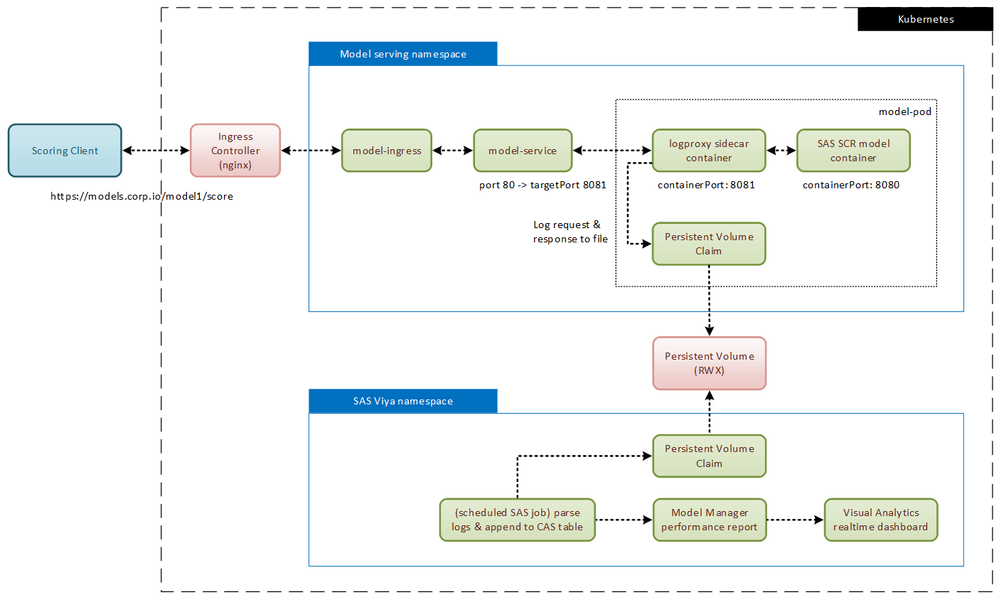

Take a look at this diagram to better understand the basic idea:

Starting on the top left, there’s a client submitting a scoring request to a model instance deployed as SCR. As you can see, there are actually two containers deployed in one “model-pod”: the “logproxy” sidecar and the main model container. Their manifest roughly looks like this:

```yaml
apiVersion: apps/v1
kind: Deployment
spec:
  template:
    spec:
      volumes:
        - name: shared-volume
          # (...)
      containers:
        - name: logproxy-sidecar
          image: ...
          ports:
            - containerPort: 8081
          volumeMounts:
            - name: shared-volume
              # (...)
        - name: score-app-main
          image: ...
          ports:
            - containerPort: 8080
```

(By the way, I have attached the full deployment manifests as a ZIP file to this blog.) The Service object in front of the Pod forwards incoming traffic on port 80 to port 8081, which is the port of the sidecar container:

```yaml
apiVersion: v1
kind: Service
spec:
  ports:
    - name: http
      protocol: TCP
      port: 80
      targetPort: 8081
```

The sidecar container (which is basically just a web server configured as a proxy) passes the request to the main model container using port 8080. Once the calculation is done, the response is sent back to the client on the same route, giving the sidecar a chance to write out a log record to the persistent volume.
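From the client’s point of view nothing changes: it sends the same scoring request as before, just to the Service, which hands it to the sidecar on port 8081. As a rough sketch, a Python client could look like this. Assumptions: the request body shape is inferred from the `inputs` arrays in the log examples in this blog, the function names are hypothetical, and the URL is a placeholder whose path must be matched by the sidecar’s nginx `location` block for the request to be proxied and logged.

```python
import json
import urllib.request


def build_score_request(values):
    """Turn {variable: value} into the name/value 'inputs' list seen in the logs."""
    return {"inputs": [{"name": name, "value": value} for name, value in values.items()]}


def score(url, values, timeout=10):
    """POST one scoring request and return the decoded JSON response."""
    body = json.dumps(build_score_request(values)).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())


# Example call (placeholder URL; the Service maps port 80 to the sidecar's 8081):
# result = score("http://<service-host>/<scoring-path>", {"CLAGE": 94.36666667, "CLNO": 9})
```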

Obviously, this persistent volume should be shared with SAS Viya so that you can set up a scheduled SAS compute job that reads the log records and adds them to a CAS table, for example (maybe for building a custom Visual Analytics dashboard or for feeding the model performance report in SAS Model Manager).
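This blog does the reading step with a SAS compute job. Purely to illustrate what that job has to deal with, here is a Python sketch that parses one of the sidecar’s log files and flattens each record into a table row; the function names and the `in_`/`out_` column prefixes are hypothetical. With the `scorelog` format used by the sidecar, every record is a JSON object terminated by `},`, so the file as a whole is not valid JSON. Dropping the final comma and wrapping the content in brackets turns it into a JSON array.

```python
import json


def read_log_records(path):
    """Parse one sidecar log file into a list of dicts.

    Every record ends with '},', so the file is a comma-terminated sequence
    of JSON objects; dropping the trailing comma and wrapping everything
    in [ ] yields a valid JSON array.
    """
    with open(path, encoding="utf-8") as fh:
        text = fh.read().strip()
    if not text:
        return []
    return json.loads("[" + text.rstrip(",") + "]")


def to_row(record):
    """Flatten one record into a single row: metadata plus one column per variable."""
    row = {"timestamp": record["timestamp"], "req_id": record["req_id"]}
    for item in record.get("request", {}).get("inputs", []):
        row["in_" + item["name"]] = item["value"]
    for item in record.get("response", {}).get("outputs", []):
        row["out_" + item["name"]] = item["value"]
    return row
```

Usage: `rows = [to_row(r) for r in read_log_records(path)]` gives one row per scoring request. Requests logged with the `{}` fallback simply produce rows without `in_` columns.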

With the basic flow covered, let’s take a deeper look at that mysterious “logproxy” thingy …

The logproxy configuration

I hope you won’t be disappointed when learning that the sidecar container (in this blog at least) is a simple nginx server with the Lua scripting module enabled. Sorry, no magic here …

You might already be familiar with the nginx web server, as it is commonly used as the default ingress controller in many Kubernetes distributions. It’s easy to package nginx into a container image, and it’s pretty lightweight as well. The nginx functionality can be extended, and the Lua module is the usual approach for embedding scripting capabilities into nginx. If you’re not familiar with Lua, don’t worry too much: the snippets you need to understand are fairly simple. They transform the request and response data into a clean output format that can easily be consumed later (by SAS, for example).

It’s not required (but certainly possible) to build the nginx+Lua container image from scratch. There are a couple of open-source images available to choose from. For example, I tested the openresty and the nginx-lua images, and they both work nicely. In the end, I decided to use the nginx-lua image because it allowed me to run the sidecar as a non-root user, but the decision is of course up to you.

I also decided that I want the sidecar to generate the log records in JSON format, so my code snippets are written for that purpose. The log output generated by the sidecar is supposed to look like this (as usual, abbreviated and formatted for clarity):

```
{
  "timestamp": "29/Nov/2023:10:23:58 +0000",
  "req_id": "805054d675e19c17d31b1b04056b7351",
  "request": {
    "inputs": [
      { "name": "CLAGE", "value": 94.36666667 },
      { "name": "CLNO", "value": 9 },
      (...)
    ]
  },
  "response": {
    "metadata": {
      "module_id": "hmeq_score",
      "elapsed_nanos": 513119,
      "step_id": "score",
      "timestamp": "2023-11-29T10:23:58.131916257Z"
    },
    "version": 1,
    "outputs": [
      { "name": "P_BAD1", "value": 0.6131944552855646 },
      { "name": "EM_PROBABILITY", "value": 0.6131944552855646 },
      (...)
    ]
  }
}
```

You can easily see the input and output data along with some metadata such as a unique request ID, the model name and more. To create this output, nginx executes some Lua code for each scoring request. These code snippets can be stored in a custom configuration file that nginx reads during startup. I used the following snippet to generate the log records shown above:

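```nginx
# One JSON object per scoring request; escape=none writes the bodies verbatim.
# Each record ends with "}," so the file is a comma-separated list of objects.
log_format scorelog escape=none '{ '
                                '"timestamp":"$time_local", '
                                '"req_id": "$request_id", '
                                '"request": $req_body, '
                                '"response": $resp_body '
                                '},';

# The main model container, reachable via localhost inside the Pod
upstream webapp {
    server 127.0.0.1:8080;
}

server {
    listen 8081;

    location /score {
        set $req_body '';
        set $resp_body '';

        # Capture the request body; fall back to an empty JSON object if
        # there is no body or it does not start like a JSON object.
        access_by_lua_block {
            ngx.req.read_body()
            ngx.var.req_body = ngx.req.get_body_data() or "{}"
            if not ngx.var.req_body:match "^{" then ngx.var.req_body = "{}" end
        }

        # Called once per response chunk: append each chunk to $resp_body.
        body_filter_by_lua_block {
            ngx.var.resp_body = (ngx.var.resp_body or "") .. ngx.arg[1]
        }

        proxy_pass http://webapp;
        proxy_redirect off;

        access_log /var/log/nginx/shared-data/model-logs/log-$server_addr.json scorelog;
    }
}
```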

I can’t discuss all the details here, so I’ll focus on three important points:

- access_by_lua_block and body_filter_by_lua_block are predefined “directives” you can use to execute code at a specific phase of request processing. access_by_lua_block runs first and processes the incoming request, followed by body_filter_by_lua_block, which runs when nginx receives the response returned by the main model container. Because the body filter is invoked once for each chunk of the response body, the snippet appends every chunk to $resp_body (see here for a more detailed explanation).

- Note the definition of the upstream webapp as 127.0.0.1:8080: this is the port the main model container listens on. As mentioned above, a sidecar shares the Pod’s network, so it can simply use “localhost” (127.0.0.1) to reach the container “next door”.

- Finally, note the output storage location: /var/log/nginx/shared-data/model-logs is the mount point I’m using for the persistent volume. I’m also adding the server address to the file name (log-$server_addr.json). Because all containers in a Pod share one IP address, $server_addr resolves to the Pod IP, which differs between replicas. The reason for this is that attributes such as the model ID or the date would not be unique if you have deployed multiple replicas of the SCR Pod, which would then all write to the same file.
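The two Lua phases can be mirrored in a few lines of Python to show what ends up in $req_body and $resp_body. This is only a simulation for illustration (nginx runs the Lua code, not this), and the function names are hypothetical. One consequence worth knowing: according to the lua-nginx-module documentation, `ngx.req.get_body_data()` returns nil when the request body has been buffered to a temporary file, so with this configuration requests larger than nginx’s `client_body_buffer_size` would be logged as `{}`. The `None` branch below models that case.

```python
def access_phase(body_data):
    """Mirror of access_by_lua_block.

    body_data stands for ngx.req.get_body_data(): None when no in-memory
    body is available, otherwise the raw request body as a string.
    """
    req_body = body_data if body_data is not None else "{}"  # ... or "{}"
    if not req_body.startswith("{"):                        # :match "^{"
        req_body = "{}"
    return req_body


def body_filter_phase(chunks):
    """Mirror of body_filter_by_lua_block: nginx invokes the filter once per
    response chunk (ngx.arg[1]), so the chunks are appended one by one."""
    resp_body = ""  # set $resp_body '';
    for chunk in chunks:
        resp_body += chunk
    return resp_body
```

Note that a body that is valid JSON but starts with whitespace would also be replaced by `{}`, because the Lua pattern anchors on the very first character.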

Closing the loop: if this configuration is stored in a ConfigMap, you can “mount” it into the logproxy sidecar to make it available to the nginx server:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: scoreapp-logproxy-nginx-conf
  namespace: default
data:
  nginx-logproxy.conf: |-
    log_format scorelog escape=none '{ '
                                    '"timestamp":"$time_local", '
                                    '"req_id": "$request_id", '
    (...)
---
apiVersion: apps/v1
kind: Deployment
spec:
  template:
    spec:
      volumes:
        - name: nginx-proxy-config
          configMap:
            name: scoreapp-logproxy-nginx-conf
      containers:
        - name: logproxy-sidecar
          volumeMounts:
            - name: nginx-proxy-config
              mountPath: /etc/nginx/conf.d/nginx-logproxy.conf
              subPath: nginx-logproxy.conf
          # (...)
```

Again, remember that I have attached the complete manifests to this blog.

Conclusion

Before closing this blog, I’d like to mention a caveat concerning log rotation. In Kubernetes you usually don’t have to bother with this, because it is handled automatically as long as the Pods use the default stdout destination for their log output.

Unfortunately, that’s not the case for the sidecar container’s log files, so there is a risk that they exceed the available disk space at some point. If you choose nginx as your web proxy (like I did in this blog), be aware that it doesn’t have native log rotation capabilities and relies on external tools like logrotate, which might not be available in the nginx container image you’re using. In other words, you might need to add another sidecar container running logrotate to manage the log files on the shared volume.
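If adding logrotate to an image is not an option, the rotation itself is simple enough to sketch. The following Python function is a hypothetical stand-in for logrotate (the name, size limit and retention count are assumptions), meant to be run periodically by such an additional sidecar. It renames oversized `log-*.json` files in logrotate style. Renaming should be safe here because the access_log path contains a variable, and the nginx documentation states that such log files are opened and closed for each write (unless `open_log_file_cache` is configured), so nginx simply creates a fresh file on the next request.

```python
import glob
import os


def rotate_logs(log_dir, max_bytes=50 * 1024 * 1024, keep=5):
    """Size-based rotation for the sidecar's log-*.json files (keep >= 1).

    log-<ip>.json -> .1 -> .2 -> ... -> .<keep>; the oldest copy is deleted.
    Returns the list of files that were rotated.
    """
    rotated = []
    for path in glob.glob(os.path.join(log_dir, "log-*.json")):
        if os.path.getsize(path) < max_bytes:
            continue
        oldest = f"{path}.{keep}"
        if os.path.exists(oldest):
            os.remove(oldest)
        # Shift older generations up by one, starting from the highest number.
        for i in range(keep - 1, 0, -1):
            src = f"{path}.{i}"
            if os.path.exists(src):
                os.rename(src, f"{path}.{i + 1}")
        os.rename(path, f"{path}.1")
        rotated.append(path)
    return rotated
```

Whichever tool you use, make sure the SAS job has consumed a rotated file before it drops out of the retention window.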

I hope you liked the blog, and I’m curious to hear your feedback. Please feel free to send in your thoughts and comments!
